This is the first of the Holiday Thought Leader Interview series. I interviewed several thought leaders to get their perspectives on various eDiscovery topics.

Today’s thought leader is Jason R. Baron. Jason has served as the National Archives' Director of Litigation since May 2000 and has been involved in high-profile cases for the federal government. His background in eDiscovery dates to the Reagan Administration, when he helped retain backup tapes containing Iran-Contra records from the National Security Council as the Justice Department’s lead counsel. Later, as director of litigation for the U.S. National Archives and Records Administration, Jason was assigned a request to review documents pertaining to tobacco litigation in U.S. v. Philip Morris.

He currently serves as Co-Chair of The Sedona Conference Working Group on Electronic Document Retention and Production. Baron is also one of the founding coordinators of the TREC Legal Track, a search project organized through the National Institute of Standards and Technology to evaluate search protocols used in eDiscovery. This year, Jason was awarded the Emmett Leahy Award for Outstanding Contributions and Accomplishments in the Records and Information Management Profession.

You were recently awarded the prestigious Emmett Leahy Award for excellence in records management. Is it unusual that a lawyer wins such an award? Or is the job of the litigator and records manager becoming inextricably linked?

Yes, it was unusual: I am the first federal lawyer to win the Emmett Leahy Award, and only the second lawyer to have done so in the 40-odd years that the award has been given out. But my career path in the federal government has been a bit unusual as well: I spent seven years working as lead counsel on the original White House PROFS email case (Armstrong v. EOP), followed by more than a decade worrying about records-related matters for the government as Director of Litigation at NARA. So with respect to records and information management, I long ago passed at least the Malcolm Gladwell test in "Outliers" where he says one needs to spend 10,000 hours working on anything to develop a level of "expertise." As to the second part of your question, I absolutely believe that to be a good litigation attorney these days one needs to know something about information management and eDiscovery, since all evidence is "born digital" and lots of it needs to be searched for electronically. As you know, I also have been a longtime advocate of a greater linking between the fields of information retrieval and eDiscovery.

In your acceptance speech you spoke about the dangers of information overload and the possibility that it will make it difficult for people to find important information. How optimistic are you that we can avoid this dystopian future? How can the legal profession help the world avoid this fate?

What I said was that in a world of greater and greater retention of electronically stored information, we need to leverage artificial intelligence and specifically better search algorithms to keep up in this particular information arms race. Although Ralph Losey teased me in a recent blog post that I was being unduly negative about future information dystopias, I actually am very optimistic about the future of search technology assisting in triaging the important from the ephemeral in vast collections of archives. We can achieve this through greater use of auto-categorization and search filtering methods, as well as having a better ability in the future to conduct meaningful searches across the enterprise (whether in the cloud or not). Lawyers can certainly advise their clients how to practice good information governance to accomplish these aims.

You were one of the founders of the TREC Legal Track research project. What do you consider that project’s achievement at this point?

The initial idea for the TREC Legal Track was to get a better handle on evaluating various types of alternative search methods and technologies, to compare them against a "baseline" of how effective lawyers were in relying on more basic forms of keyword searching. The initial results were a wake-up call, in showing lawyers that sole reliance on simple keywords and Boolean strings sometimes results in a large quantity of relevant evidence going missing. But during the half-decade of research that now has gone into the track, something else of perhaps even greater importance has emerged from the results, namely: we have a much better understanding now of what a good search process looks like, which includes a human in the loop (known in the Legal Track as a topic authority) evaluating on an ongoing, iterative basis what automated search software kicks out by way of initial results. The biggest achievement however may simply be the continued existence of the TREC Legal Track itself, still going in its 6th year in 2011, and still producing important research results, on an open, non-proprietary platform, that are fully reproducible and that benefit both the legal profession as well as the information retrieval academic world. While I stepped away after 4 years from further active involvement in the Legal Track as a coordinator, I continue to be highly impressed with the work of the current track coordinators, led by Professor Doug Oard at the University of Maryland, who has remained at the helm since the very beginning.

To what extent has TREC’s research proven the reliability of computer-assisted review in litigation? Is there a danger that the profession assumes the reliability of computer-assisted review is a settled matter?

The TREC Legal Track results I am most familiar with through calendar year 2010 have shown computer-assisted review methods finding in some cases on the order of 85% of relevant documents (a .85 recall rate) per topic while only producing 10% false positives (a .90 precision rate). Not all search methods have had these results, and there has been in fact a wide variance in success achieved, but these returns are very promising when compared with historically lower rates of recall and precision across many information retrieval studies. So the success demonstrated to date is highly encouraging. Coupled with these results has been additional research reported by Maura Grossman & Gordon Cormack, in their much-cited paper Technology-Assisted Review in E-Discovery Can Be More Effective and More Efficient Than Exhaustive Manual Review, which makes the case for the greater accuracy and efficiency of computer-assisted review methods.

Other research conducted outside of TREC, most notably by Herbert Roitblat, Patrick Oot and Anne Kershaw, also points in a similar direction (as reported in their article Mandating Reasonableness in a Reasonable Inquiry). All of these research efforts buttress the defensibility of technology-assisted review methods in actual litigation, in the event of future challenges. Having said this, I do agree that we are still in the early days of using many of the newer predictive types of automated search methods, and I would be concerned about courts simply taking on faith the results of past research as being applicable in all legal settings. There is no question however that the use of predictive analytics, clustering algorithms, and seed sets as part of technology-assisted review methods is saving law firms money and time in performing early case assessment and for multiple other purposes, as reported in a range of eDiscovery conferences and venues, and I of course support all of these good efforts.
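Editor's note: for readers less familiar with the recall and precision figures cited in this answer, here is a minimal Python sketch of how the two rates are computed. The document counts are hypothetical, chosen only so the arithmetic reproduces a .85 recall rate and a .90 precision rate; they are not taken from any TREC result. The sketch also assumes that "10% false positives" means 10% of the documents a method retrieves turn out not to be relevant.

```python
def recall(true_positives: int, total_relevant: int) -> float:
    """Share of all relevant documents that the search actually found."""
    return true_positives / total_relevant


def precision(true_positives: int, total_retrieved: int) -> float:
    """Share of retrieved documents that are actually relevant."""
    return true_positives / total_retrieved


# Hypothetical counts for one topic (illustrative only, not TREC data).
total_relevant = 900    # relevant documents that exist in the collection
total_retrieved = 850   # documents the review method returned
true_positives = 765    # returned documents that are relevant

r = recall(true_positives, total_relevant)
p = precision(true_positives, total_retrieved)
false_positive_share = 1 - p  # retrieved documents that are not relevant

print(f"recall={r:.2f} precision={p:.2f} false positives={false_positive_share:.2f}")
```

High recall means little relevant evidence goes missing, while high precision means reviewers spend less time on documents that do not matter; a method has to do well on both to be useful in practice.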

You have discussed the need for industry standards in eDiscovery. What benefit would standards provide?

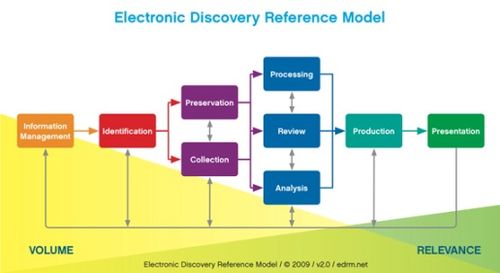

Ever since I served as Co-Editor in Chief on The Sedona Conference Commentary on Achieving Quality in eDiscovery (2009), I have been thinking about the process for conducting good eDiscovery. That paper focused on project management, sampling, and imposing various forms of quality controls on collection, review, and production. The question is, is a good eDiscovery process capable of being fit into a maturity model of sorts, and might it be useful to consider whether vendors and law firms would benefit from having their in-house eDiscovery processes audited and certified as meeting some common baseline of quality? To this end, the DESI IV workshop ("Discovery of ESI") held in Pittsburgh last June, as part of the Thirteenth International AI and Law Conference (ICAIL 2011), had as its theme exploring what types of model standards could be imposed on the eDiscovery discipline, so that we all would be able to work from some common set of benchmarks. Some 75 people attended and 20-odd papers were presented. I believe the consensus in the room was that we should be pursuing further discussions as to what an ISO 9001-type quality standard would look like as applied to the specific eDiscovery sector, much as other industry verticals have their own ISO standards for quality. Since June, I have been in touch with some eDiscovery vendors that have actually undergone an audit process to achieve ISO 9001 certification. This is an area where no consensus has yet emerged as to the path forward, but I will be pursuing further discussions with DESI workshop attendees in the coming months and promise to report back in this space as to what comes of these efforts.

What sort of standards would benefit the industry? Do we need standards for pieces of the eDiscovery process, like a defensible search standard, or are you talking about a broad quality assurance process?

DESI IV started by concentrating on what would constitute a defensible search standard; however, it became clear at the workshop and over the course of the past few months that we need to think bigger, in looking across the eDiscovery life cycle as to what constitutes best practices through automation and other means. We need to remember however that eDiscovery is a very young discipline, as we're only five years out from the 2006 Rules Amendments. I don't have all the answers, by any means, on what would constitute an acceptable set of standards, but I like to ask questions and believe in a process of continuous, lifelong learning. As I said, I promise I'll let you know about what success has been achieved in this space.

Thanks, Jason, for participating in the interview!

And to the readers, as always, please share any comments you might have, or let us know if you’d like to know more about a particular topic!