eDiscovery Tips: SaaS and eDiscovery – Top Considerations

There was an interesting article this week regarding Software as a Service (SaaS) and eDiscovery entitled “Top 7 Legal Things to Know about Cloud, SaaS and eDiscovery” on CIO Update.com, written by David Morris and James Shook from EMC. The article, which relates to storage of ESI within cloud and SaaS providers, can be found here.

The authors note that “[p]roponents of the cloud compare it to the shift in electrical power generation at the turn of the century [1900’s], where companies had to generate their own electric power to run factories. Leveraging expertise and economies of scale, electric companies soon emerged and began delivering on-demand electricity at an unmatched cost point and service level.” Cloud proponents argue that the SaaS model is doing the same for IT services.

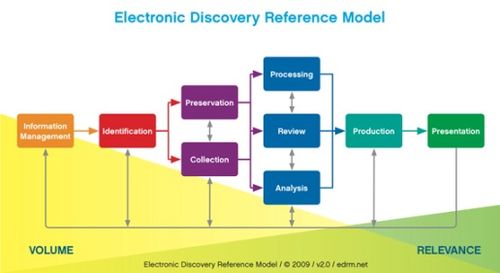

However, the decision to move to SaaS solutions for IT services doesn’t just affect IT – there are compliance and legal considerations as well. Because the parties to a case have a duty to identify, preserve and produce relevant electronically stored information (ESI), information that those parties store in a cloud infrastructure or SaaS application is subject to the same requirements, even though it isn’t necessarily under their full control. With that in mind, the article looks at key eDiscovery issues that must be addressed by organizations using public cloud and SaaS offerings for ESI, as follows (requirements in bold are quoted directly from the article):

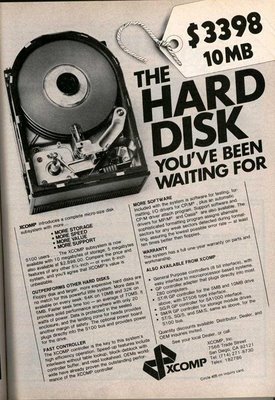

- **Where is ESI actually located when it is in the ethereal cloud or SaaS application?** It’s important to know where your data is actually stored. Because SaaS providers are expected to deliver data on demand at any time, they may store your data in more than one data center for redundancy purposes. Data centers could be located outside of the US, so different compliance and privacy requirements may come into play if there is a need to produce data from these locations.

- **What are the legal implications of e-discovery in the cloud?** Little case law exists on the subject, but it is expected that the responsibility for timely preservation, collection and production of the data remains with the organization that is a party to the lawsuit, even though that data may be under the direct control of the cloud provider.

- **What happens if a lawsuit is in the US but one company’s headquarters is in another country? Or what if the data is in a country where the privacy rules are different?** The article references one case – AccessData Corp. v. ALSTE Technologies GMBH, 2010 WL 318477 (D. Utah Jan. 21, 2010) – where the German company ALSTE cited German privacy laws as preventing it from collecting relevant company emails that were located in Germany (the US court compelled production anyway). So, jurisdictional factors can come into play when cloud data is housed in a foreign jurisdiction.

This is too big a topic to cover in one post, so we’ll cover the other four eDiscovery issues to address in Monday’s post. Let the anticipation build!

So, what do you think? Does your company have ESI hosted in the cloud? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.