This is the second installment in our Holiday Thought Leader Interview series. I interviewed several thought leaders to get their perspectives on various eDiscovery topics.

Today's thought leader is Bennett B. Borden. Bennett is the co-chair of Williams Mullen’s eDiscovery and Information Governance Section. Based in Richmond, Va., his practice is focused on electronic discovery and information law. He has published several papers on the use of predictive coding in litigation. Bennett not only advocates predictive coding in review but has also reorganized his own litigation team to use advanced computer technology more effectively in eDiscovery.

You have written extensively about the ways that the traditional, or linear, review process is broken. Most of our readers understand the issue, but how well has the profession at large grappled with it? Are the problems well understood?

The problem with the expense of document review is well understood, but how to solve it is less well known. Fortunately, there is some great research being done by both academics and practitioners that is helping shed light on both the problem and the solution. In addition to the research we’ve written about in The Demise of Linear Review and Why Document Review is Broken, some very informative research has come out of the TREC Legal Track and subsequent papers by Maura R. Grossman and Gordon V. Cormack, as well as by Jason R. Baron, the eDiscovery Institute, Douglas W. Oard and Herbert L. Roitblat, among others. Because of this important research, the eDiscovery bar is becoming increasingly aware of how document review and, more importantly, fact development can be more effective and less costly through the use of advanced technology and artful strategy.

You are a proponent of computer-assisted review. Is computer search technology truly mature? Is it a defensible strategy for review?

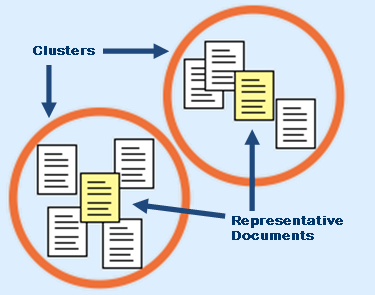

Absolutely. In fact, I would argue that computer-assisted review is actually more defensible than traditional linear review. By computer-assisted review, I mean the utilization of advanced search technologies beyond mere search terms (e.g., topic modeling, clustering, meaning-based search, predictive coding, latent semantic analysis, probabilistic latent semantic analysis, Bayesian probability) to more intelligently address a data set. These technologies, to a greater or lesser extent, group documents based upon similarities, which allows a reviewer to address the same kinds of documents in the same way.

Computers are vastly superior to humans at quickly finding similarities (and dissimilarities) within data. Moreover, the similarities that computers can find have advanced beyond mere content (through search terms) to include many other aspects of data, such as correspondents, domains, dates, times, locations, communication patterns, etc. Because the technology can now recognize and address all of these aspects of data, the resulting groupings of documents are more granular and internally cohesive. This means that the reviewer makes fewer and more consistent choices across similar documents, leading to a faster, cheaper, better and more defensible review.
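For readers curious about what "grouping documents based upon similarities" can look like in practice, here is a minimal sketch in Python. It is an illustration under stated assumptions, not Bennett's method or any review platform's algorithm: it substitutes simple word-overlap (Jaccard) similarity for the more advanced techniques named above, adds one metadata signal (the correspondent's domain), and the field names, the 0.8/0.2 weighting and the 0.5 threshold are all hypothetical choices.

```python
import re


def tokens(text):
    # Lowercase word tokens: a crude stand-in for real text analytics.
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def similarity(a, b):
    # Jaccard overlap of content words (shared words / all distinct words).
    ta, tb = tokens(a["text"]), tokens(b["text"])
    union = ta | tb
    content = len(ta & tb) / len(union) if union else 0.0
    # Hypothetical metadata signal: same correspondent domain.
    meta = 1.0 if a["domain"] == b["domain"] else 0.0
    # Hypothetical weighting of content versus metadata.
    return 0.8 * content + 0.2 * meta


def group_documents(docs, threshold=0.5):
    # Greedy single pass: put each document in the first group whose
    # first member is similar enough, otherwise start a new group.
    groups = []
    for doc in docs:
        for group in groups:
            if similarity(doc, group[0]) >= threshold:
                group.append(doc)
                break
        else:
            groups.append([doc])
    return groups


# Hypothetical sample documents.
docs = [
    {"id": 1, "text": "Q3 pricing agreement draft for Acme", "domain": "acme.com"},
    {"id": 2, "text": "Revised Q3 pricing agreement draft for Acme", "domain": "acme.com"},
    {"id": 3, "text": "Lunch order for Friday", "domain": "example.org"},
]

for group in group_documents(docs):
    print([d["id"] for d in group])
```

Here the two pricing drafts land in one group and the lunch order in another, so a reviewer could make one coding decision for the first group rather than two. Production tools use far richer models, but the payoff is the same: similar documents are surfaced together and treated consistently.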

How has the use of computer-assisted review, such as predictive coding, changed the way you tackle a case? Does it let you deploy your resources in new ways?

I have significantly changed how I address a case as both technology and the law have advanced. Although a vast amount of data might be discoverable in a particular case, less than 1 percent of that data is ever used in the case or truly advances its resolution. The resources I deploy focus on identifying that 1 percent and on avoiding the burden and expense largely wasted on the other 99 percent. Part of this is done by developing, negotiating and obtaining reasonable, iterative eDiscovery protocols that focus on the critical data first. The law of eDiscovery has developed at a rapid pace and provides the tools to develop and defend these kinds of protocols. An important part of these protocols is the effective use of computer-assisted review.

Lately there has been a lot of attention given to the idea that computer-assisted review will replace attorneys in litigation. How much truth is there to that idea? How will computer-assisted review affect the role of attorneys?

Technology improves productivity, reducing the time required to accomplish a task. This is no less true of computer-assisted review. The 2006 amendments to the Federal Rules of Civil Procedure caused a massive increase in the number of attorneys devoted to the review of documents. As search technology and the review tools that employ it continue to improve, the demand for attorneys devoted to review will obviously decline.

But this is not a bad thing. Traditional linear document review is horrifically tedious and boring, and it is not the best use of legal education and experience. Fundamentally, litigators develop facts and apply the law to those facts to determine a client’s position and advise the client to act accordingly. Computer-assisted review allows us to get at the most relevant facts more quickly, reducing both the scope and duration of litigation. This is what lawyers should be focused on accomplishing, and computer-assisted review can help them do so.

With the rise of computer-assisted review, do lawyers need to learn new skills? Do lawyers need to be computer scientists or statisticians to play a role?

Lawyers do not need to be computer scientists or statisticians, but they certainly need to have a good understanding of how information is created, how it is stored, and how to get at it. In fact, lawyers who do not have this understanding, whether alone or in conjunction with advisory staff, are simply not serving their clients competently.

You’ve suggested that lawyers involved in computer-assisted review enjoy the work more than in the traditional manual review process. Why do you think that is?

I think it is because the lawyers are using their legal expertise to pursue lines of investigation and develop the facts surrounding them, as opposed to simply playing a monotonous game of memory match. Our review strategy is to have very talented lawyers address a data set using technological and strategic means to get to the facts that matter. In doing so, our lawyers uncover meaning within a huge volume of information and weave it into a story that resolves the matter. This is exciting and meaningful work, and it has had a significant impact on our clients’ litigation budgets.

How is computer-assisted review changing the competitive landscape? Does it provide an opportunity for small firms to compete that may not have existed a few years ago?

We live in the information age, and lawyers, especially litigators, fundamentally deal in information. In this age it is easier than ever to get to the facts that matter, because more facts (and more granular facts) exist within electronic information. The lawyer who knows how to get at the facts that matter is simply a more effective lawyer. The information age has fundamentally changed the competitive landscape. Small companies are able to achieve immense success through the skillful application of technology. The same is true of law firms. Smaller firms that consciously develop and nimbly use the technological advantages available to them have every opportunity to excel, perhaps even more so than larger, highly leveraged firms. It is no longer about size and head count; it’s about knowing how to get at the facts that matter, and winning cases by doing so.

Thanks, Bennett, for participating in the interview!

And to our readers: as always, please share any comments you might have, or let us know if you’d like to learn more about a particular topic!