eDiscovery Daily Blog

A Model for Reducing Private Data – eDiscovery Best Practices

Since the Electronic Discovery Reference Model (EDRM) annual meeting just four short months ago in May, several EDRM projects (Metrics, Jobs, Data Set and the new Native Files project) have already announced new deliverables and/or requested feedback. Now, the Data Set project has announced another new deliverable – a new Privacy Risk Reduction Model.

Announced in yesterday’s press release, the new model “is a process for reducing the volume of private, protected and risky data by using a series of steps applied in sequence as part of the information management, identification, preservation and collection phases” of the EDRM. It “is used prior to producing or exporting data containing risky information such as privileged or proprietary information.”

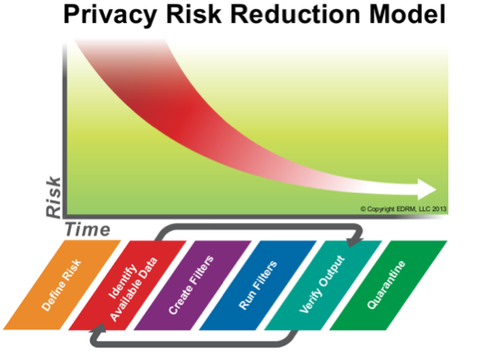

The model uses a series of six steps applied in sequence with the middle four steps being performed as an iterative process until the amount of private information is reduced to a desirable level. Here are the steps as described on the EDRM site:

- Define Risk: Risk is initially identified within an organization by stakeholders who can quantify the specific risks a particular class or type of data may pose. For example, risky data may include personally identifiable information (PII) such as credit card numbers, attorney-client privileged communications or trade secrets.

- Identify Available Data: Locations and types of risky data should be identified. Possible locations may include email repositories, backups, email and data archives, file shares, individual workstations and laptops, and portable storage devices. The quantity and type should also be specified.

- Create Filters: Search methods and filters are created to ‘catch’ risky data. They may include keyword, date range, file type, subject line, etc.

- Run Filters: The filters are executed and the results evaluated for accuracy.

- Verify Output: The data identified or captured by the filters is compared against the anticipated output. If the filters did not catch all the expected risky data, additional filters can be created or existing filters can be refined and the process run again. Additionally, the output from the filters may identify additional risky data or data sources, in which case this new data should be subjected to the risk reduction process.

- Quarantine: After an acceptable amount of risky data has been identified through the process, it should be quarantined from the original data sets. This may be done through migration of non-risky data, or through extraction or deletion of the risky data from the original data set.
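To make the iterative part of the steps above more concrete, here is a minimal Python sketch of the Create Filters, Run Filters, Verify Output and Quarantine steps. It is only an illustration, not anything published by EDRM: the `Document` class, the helper names (`keyword_filter`, `pattern_filter`, `run_filters`, `reduce_risk`), the `refine` callback and the sample credit card pattern are all assumptions made for this example, and the "anticipated output" is modeled simply as a set of document IDs already known to be risky.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass
class Document:
    # Hypothetical, simplified record; real collections carry far more metadata.
    doc_id: str
    subject: str
    body: str


# A filter is any function that returns True when a document looks risky.
Filter = Callable[[Document], bool]


def keyword_filter(keyword: str) -> Filter:
    """Create a filter that matches a keyword in the subject line or body."""
    kw = keyword.lower()
    return lambda doc: kw in doc.subject.lower() or kw in doc.body.lower()


def pattern_filter(pattern: str) -> Filter:
    """Create a filter that matches a regular expression in the body."""
    regex = re.compile(pattern)
    return lambda doc: bool(regex.search(doc.body))


def run_filters(docs: List[Document], filters: List[Filter]) -> Set[str]:
    """Run Filters: return the IDs of documents caught by at least one filter."""
    return {d.doc_id for d in docs if any(f(d) for f in filters)}


def reduce_risk(
    docs: List[Document],
    filters: List[Filter],
    known_risky: Set[str],
    refine: Callable[[Set[str]], List[Filter]],
    max_rounds: int = 5,
) -> Tuple[List[Document], List[Document]]:
    """Repeat Run Filters / Verify Output, then Quarantine what was flagged."""
    flagged: Set[str] = set()
    for _ in range(max_rounds):
        flagged = run_filters(docs, filters)
        # Verify Output: compare what was caught against the anticipated output.
        missed = known_risky - flagged
        if not missed:
            break
        # Create Filters (again): add filters aimed at what was missed.
        filters = filters + refine(missed)
    # Quarantine: separate flagged documents from the rest of the data set.
    quarantined = [d for d in docs if d.doc_id in flagged]
    retained = [d for d in docs if d.doc_id not in flagged]
    return retained, quarantined


# Made-up sample data for illustration only.
docs = [
    Document("1", "Lunch", "See you at noon."),
    Document("2", "Payment", "Card 4111 1111 1111 1111 expires soon."),
    Document("3", "Privileged and confidential", "Advice from counsel attached."),
]
# First pass: a rough 16-digit card number pattern (an assumption, not a validator).
initial_filters = [pattern_filter(r"\b(?:\d{4}[ -]?){3}\d{4}\b")]
known_risky = {"2", "3"}


def refine(missed: Set[str]) -> List[Filter]:
    # In practice a reviewer would inspect the missed documents; here we
    # simply add a keyword filter for privileged communications.
    return [keyword_filter("privileged")]


retained, quarantined = reduce_risk(docs, initial_filters, known_risky, refine)
print([d.doc_id for d in retained])
print([d.doc_id for d in quarantined])
```

In this sketch the first pass catches only the document with the card number, the verification step notices that the privileged email was missed, and a second pass with the added keyword filter catches it. A real workflow would replace the fixed `known_risky` set with sampling and human review, and would treat "acceptable" as a threshold rather than requiring every known item to be caught.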

No EDRM model would be complete without a handy graphic to illustrate the process so, as you can see above, this model includes one that illustrates the steps as well as the risk-time continuum (not to be confused with the space-time continuum, relatively speaking)… 😉

It looks like a sound process, and it will be interesting to see it in use. Hopefully, it will enable the Data Set team to avoid some of the “controversy” experienced during the process of removing private data from the Enron data set. Kudos to the Data Set team, including project co-leaders Michael Lappin, director of archiving strategy at Nuix, and Eric Robi, president of Elluma Discovery!

So, what do you think of the process? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.