This is the second of the 2016 LegalTech New York (LTNY) Thought Leader Interview series. eDiscovery Daily interviewed several thought leaders at LTNY this year to get their observations regarding trends at the show and generally within the eDiscovery industry. Unlike previous years, some of the questions posed to each thought leader were tailored to their position in the industry, so we have dispensed with the standard questions we normally ask all thought leaders.

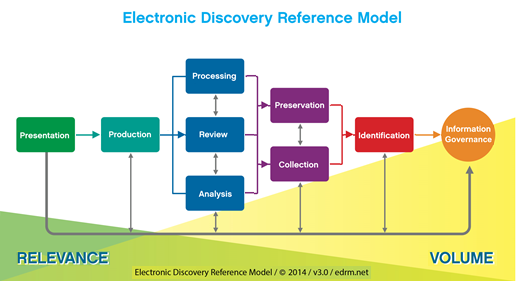

Today’s thought leader is George Socha. A litigator for 16 years, George is President of Socha Consulting LLC, offering services as an electronic discovery expert witness, special master and advisor to corporations, law firms and their clients, and legal vertical market software and service providers in the areas of electronic discovery and automated litigation support. George has also been co-author of the leading survey on the electronic discovery market, The Socha-Gelbmann Electronic Discovery Survey; in 2011, he and Tom Gelbmann converted the Survey into Apersee, an online system for selecting eDiscovery providers and their offerings. In 2005, he and Tom Gelbmann launched the Electronic Discovery Reference Model project to establish standards within the eDiscovery industry – today, the EDRM model has become a standard in the industry for the eDiscovery life cycle and there are nine active projects with over 300 members from 81 participating organizations. George has a J.D. from Cornell Law School and a B.A. from the University of Wisconsin – Madison.

What are your general observations about LTNY this year and about emerging eDiscovery trends overall?

{Interviewed the first morning of LTNY, so the focus of the question to George was more about his expectations for the show and also about general industry trends}.

This is the largest legal technology trade show of the year so it’s going to be a “who’s who” of people in the hallways. It will be an opportunity for service and software providers to roll out their new “fill in the blank”. It will be great to catch up with folks that I only get to see once a year as well as folks that I get to see a lot more than that. And, yet again, I don’t expect any dramatic revelations on the exhibit floor or in any of the sessions.

We continue to hear two recurring themes: the market is consolidating and eDiscovery has become a commodity. I still don’t see either of these actually happening. Consolidation would be if some providers were acquiring others and no new providers were coming along to fill in the gaps, or if a small number of providers were taking over a huge share of the market. Instead, as quickly as one provider acquires another, two, three or more new providers pop up, often with new ideas they hope will gain traction. In terms of dominating the market, there has been some consolidation on the software side, but as to service providers the market continues to look more like law firms than like accounting firms.

In terms of commoditization, I think we still have a market where people want to pay “K-mart, off the rack” prices for “Bespoke” suits. That reflects continued intense downward pressure on prices. It does not suggest, however, that the e-discovery market has begun to approximate, for example, the markets for corn, oil or generic goods. E-discovery services and software are not yet fungible, that is, interchangeable with little meaningful difference between them other than price. I have heard no discussion of “e-discovery futures.” And providers and consumers alike still seem to think that brand, levels of project management, and variations in depth and breadth of offerings matter considerably.

Given that analytics happens at various places throughout the eDiscovery life cycle, is it time to consider tweaking the EDRM model to reflect a broader scope of analysis?

The question always is, “What should the tweak look like?” The questions I ask in return are “What’s there that should not be there?”, “What should be there that is not?” and “What should be re-arranged?” One common category of suggested tweaks is the set meant to change the EDRM model to look more like one particular person’s or organization’s workflow. This keeps coming up even though the model was never meant to be a workflow – it is a conceptual framework to help break one unitary item into a set of more discrete components that you can examine in comparison to each other and also in isolation.

A second set of tweaks focuses on adding more boxes to the diagram. Why, we get asked, don’t we have a box called Early Case Assessment, and another called Legal Hold, and another called Predictive Coding, and so on? With activities like analytics, you can take the entire EDRM diagram and drop it inside any one of those boxes or in that circle. Those concepts already are present in the current diagram. If, for example, you took the entire EDRM diagram and dropped it inside the Identification box, you could call that Early Case Assessment or Early Data Assessment. There was discussion early on about whether there should be a box for “Search”, but Search is really an Analysis function – there’s a home for it there.

A third set of suggested tweaks centers on eliminating elements from the diagram. Some have proposed that we combine the processing and review boxes into a single box – but the rationale they offer is that, because they themselves offer both of those capabilities, there no longer is a need to show separate boxes for the separate functions.

What are you working on that you’d like our readers to know about?

First, we would like to invite current and prospective members to join us on April 18 for our Spring meeting which will be at the ACEDS conference this year. The conference is from April 18 through April 20, with the educational portion of the conference slated for the 19th and 20th.

For several years at the conference, ACEDS has given out awards honoring eDiscovery professionals. To congratulate this year’s winners we will be giving them one-year individual EDRM memberships.

On the project side, one of the undertakings we are working on is “SEAT-1,” a follow-up to our eMSAT-1 (eDiscovery Maturity Self-Assessment Test). SEAT-1 will be a self-assessment test specifically for litigation groups and law firms. The test is intended to enable them to better assess where they are, how they are doing and where they want to be. We are also working on different ways to deliver our budget calculators. It’s too early to provide details on that, but we’re hoping to be able to provide more information soon.

Finally, in the past year we have begun to develop and deliver member-only resources. We published a data set for members only and we added a new section to the EDRM site covering the changes to the Federal rules, including a comprehensive collection of information about those changes. This year, we will be working on additional resources to be available to just our members.

Thanks, George, for participating in the interview!

And to the readers, as always, please share any comments you might have or let us know if you’d like to know more about a particular topic!

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine. eDiscovery Daily is made available by CloudNine solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscovery Daily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.