Why Is TAR Like a Bag of M&M’s?, Part Two: eDiscovery Best Practices

Editor’s Note: Tom O’Connor is a nationally known consultant, speaker, and writer in the field of computerized litigation support systems. He has also been a great addition to our webinar program, participating with me on several recent webinars. Tom has also written several terrific informational overview series for CloudNine, including eDiscovery and the GDPR: Ready or Not, Here it Comes (which we covered as a webcast), Understanding eDiscovery in Criminal Cases (which we also covered as a webcast) and ALSP – Not Just Your Daddy’s LPO. Now, Tom has written another terrific overview regarding Technology Assisted Review titled Why Is TAR Like a Bag of M&M’s? that we’re happy to share on the eDiscovery Daily blog. Enjoy! – Doug

Tom’s overview is split into four parts, so we’ll cover each part separately. The first part was covered on Tuesday. Here’s part two.

History and Evolution of Defining TAR

Most people would begin the discussion by agreeing with this framing statement made by Maura Grossman and Gordon Cormack in their seminal article, Technology-Assisted Review in E-Discovery Can Be More Effective and More Efficient Than Exhaustive Manual Review, XVII RICH. J.L. & TECH. 11 (2011):

Overall, the myth that exhaustive manual review is the most effective—and therefore, the most defensible—approach to document review is strongly refuted. Technology-assisted review can (and does) yield more accurate results than exhaustive manual review, with much lower effort.

A technology-assisted review process may involve, in whole or in part, the use of one or more approaches including, but not limited to, keyword search, Boolean search, conceptual search, clustering, machine learning, relevance ranking, and sampling.

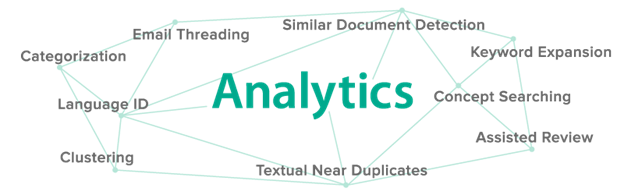

So, TAR began as a process, and in the early stages of the discussion it was common to refer to various TAR tools under the heading “analytics,” as illustrated by the graphic below from Relativity.

Copyright © Relativity

That general heading was often divided into two main categories:

Structured Analytics

- Email threading

- Near duplicate detection

- Language detection

Conceptual Analytics

- Keyword expansion

- Conceptual clustering

- Categorization

- Predictive Coding

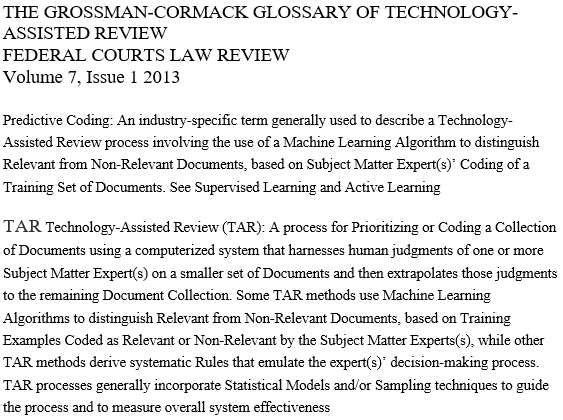

That definition of Predictive Coding as part of the TAR process held for quite some time. In fact, the current EDRM definition of Predictive Coding still refers to it as:

An industry-specific term generally used to describe a Technology-Assisted Review process involving the use of a Machine Learning Algorithm to distinguish Relevant from Non-Relevant Documents, based on a Subject Matter Expert’s Coding of a Training Set of Documents

But before long, the definition began to erode and TAR started to become synonymous with Predictive Coding. Why? For several reasons, I believe.

- The Grossman-Cormack glossary of 2013 used the phrase “Coding” to define both TAR and PC, and I think various parties then conflated the two. (See No. 2 below.)

- Continued use of the terms interchangeably. See, e.g., Ralph Losey’s “TAR Course,” where the very beginning of the first chapter states, “We also added a new class on the historical background of the development of predictive coding” (which is, by the way, an excellent read).

- Any discussion of TAR involves selecting documents using algorithms and most attorneys react to math the way the Wicked Witch of the West reacted to water.

Again, Ralph Losey provides a good example. (I’m not trying to pick on Ralph; he is just such a prolific writer that his examples are everywhere…and deservedly so.) He refers to gain curves, x-axis vs. y-axis, Horvitz-Thompson estimators, recall rates, prevalence ranges and my personal favorite, “word-based tf-idf tokenization strategy.”

“Danger. Danger. Warning. Will Robinson.”

- Marketing: the simple fact is that some vendors sell predictive coding tools. Why talk about other TAR tools when you don’t make them? Easier to call your tool TAR and leave it at that.

The problem became so acute that by 2015, according to a 2016 ACEDS News Article, Maura Grossman and Gordon Cormack trademarked the terms “Continuous Active Learning” and “CAL”, claiming those terms’ first commercial use on April 11, 2013 and January 15, 2014. In an ACEDS interview earlier in the year, Maura stated that “The primary purpose of our patents is defensive; that is, if we don’t patent our work, someone else will, and that could inhibit us from being able to use it. Similarly, if we don’t protect the marks ‘Continuous Active Learning’ and ‘CAL’ from being diluted or misused, they may go the same route as technology-assisted review and TAR.”

So then, what exactly is TAR? Everyone agrees that manual review is inefficient, but nobody can agree on what software the lawyers should use and how. I still prefer to go back to Maura and Gordon’s original definition. We’re talking about a process, not a product.

TAR isn’t a piece of software. It’s a process that can include many different steps, several pieces of software, and many decisions by the litigation team. Ralph calls it the multi-modal approach: a combination of people and computers to get the best result.

In short, analytics are the individual tools. TAR is the process you use to combine the tools you select. The next consideration, then, is how to make that selection.

We’ll publish Part 3 – Uses for TAR and When to Use or Not Use It – next Tuesday.

So, what do you think? How would you define TAR? And, as always, please share any comments you might have or let us know if you’d like to know more about a particular topic.

Image Copyright © Mars, Incorporated and its Affiliates.

