Law Firm Partner Says Hourly Billing Model “Makes No Sense” with AI: eDiscovery Trends

Artificial intelligence (AI) is transforming the practice of law, and we’ve covered the topic numerous times on this blog. That count doesn’t even include all of our posts about technology assisted review (TAR). According to one law firm partner at a recent panel discussion, AI could even (finally) spell the end of the billable hour.

In a post on Bloomberg Law’s Big Law Business blog (Billable Hour ‘Makes No Sense’ in an AI World), Helen Gunnarsson covered a panel discussion at a recent American Bar Association conference. The panelists were Dennis Garcia, an assistant general counsel for Microsoft in Chicago; Kyle Doviken, a lawyer who works for Lex Machina in Austin; and Anthony E. Davis, a partner with Hinshaw & Culbertson LLP in New York. The panel was moderated by Bob Ambrogi, a Massachusetts lawyer and blogger whose blogs include LawSites, which we’ve frequently referenced here.

Davis showed the audience a slide quoting Andrew Ng, a computer scientist and professor at Stanford University: “If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future.” AI can “automate expertise,” Davis said. Because software marketed by information and technology companies is increasingly making it unnecessary to ask a lawyer for information regarding statutes, regulations, and requirements, “clients are not going to pay for time,” he said. Instead, he predicted, they will pay for a lawyer’s “judgment, empathy, creativity, adaptability, and emotional intelligence.”

Davis said AI will result in dramatic changes in law firms’ hiring and billing, among other things. The hourly billing model, he said, “makes no sense in a universe where what clients want is judgment.” Law firms should begin to concern themselves not with the degrees or law schools attended by candidates for employment but with whether they are “capable of developing judgment, have good emotional intelligence, and have a technology background so they can be useful” for long enough to make hiring them worthwhile, he said.

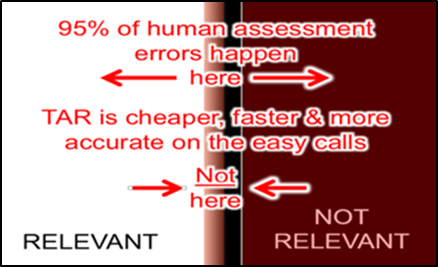

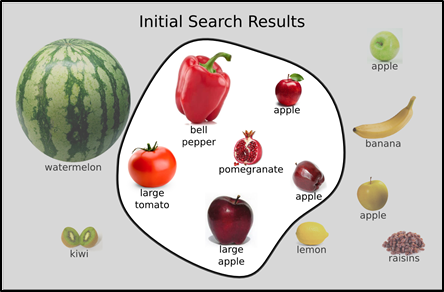

The panelists gave examples of how artificial intelligence can make lawyers more efficient in areas such as legal research, document review in eDiscovery, drafting and evaluating contracts, evaluating lateral hires, and even assessing the tendencies of federal judges. Doviken described a partner at a large firm who had a “hunch” that a certain judge’s rulings favored alumni of the judge’s law school. After reviewing three years’ worth of data, the firm concluded the hunch was valid, assigned a graduate of that law school to a matter pending before that judge, and started winning its motions.

“The next generation of lawyers is going to have to understand how AI works” as part of the duty of competence, said Davis.

So, what do you think? Could AI spell the end of the billable hour? Please let us know if you have any comments or if you’d like to know more about a particular topic.

Sponsor: This blog is sponsored by CloudNine, which is a data and legal discovery technology company with proven expertise in simplifying and automating the discovery of data for audits, investigations, and litigation. Used by legal and business customers worldwide including more than 50 of the top 250 Am Law firms and many of the world’s leading corporations, CloudNine’s eDiscovery automation software and services help customers gain insight and intelligence on electronic data.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine. eDiscovery Daily is made available by CloudNine solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscovery Daily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.