eDiscovery Case Law: On the Eve of Trial with Apple, Samsung is Dealt Adverse Inference Sanction

In Apple Inc. v. Samsung Elecs. Co., Case No.: C 11-1846 LHK (PSG) (N.D. Cal.), Magistrate Judge Paul S. Grewal ruled last week that jurors may draw an “adverse inference” from Samsung’s automatic deletion of emails that Apple requested in pre-trial discovery.

Two of the world’s dominant smartphone makers are locked in lawsuits against each other all over the globe as they compete fiercely in the exploding mobile handset market. Both multinationals have brought the best weapons available to the fight, with Apple asserting a number of technical and design patents along with trade dress rights. Samsung, in return, is asserting its “FRAND” (“Fair, Reasonable and Non-Discriminatory”) patents against Apple. The debate rages online about whether a rectangular slab of glass should be patentable and whether Samsung is abusing its FRAND patents.

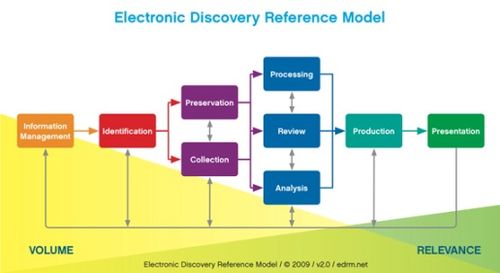

In this case, Samsung’s proprietary “mySingle” email system is at the center of the dispute. In this web-based system, which Samsung has argued is consistent with Korean law, any email that is not manually saved is automatically deleted every two weeks. Unfortunately, Samsung’s failure to turn “off” the auto-delete function resulted in spoliation of evidence, as potentially responsive emails were deleted after the duty to preserve arose.

Judge Grewal had harsh words in his order, noting the trouble Samsung has faced in the past:

“Samsung’s auto-delete email function is no stranger to the federal courts. Over seven years ago, in Mosaid v. Samsung, the District of New Jersey addressed the “rolling basis” by which Samsung email was deleted or otherwise rendered inaccessible. Mosaid also addressed Samsung’s decision not to flip an “off-switch” even after litigation began. After concluding that Samsung’s practices resulted in the destruction of relevant emails, and that “common sense dictates that [Samsung] was more likely to have been threatened by that evidence,” Mosaid affirmed the imposition of both an adverse inference and monetary sanctions.

Rather than building itself an off-switch—and using it—in future litigation such as this one, Samsung appears to have adopted the alternative approach of “mend it don’t end it.” As explained below, however, Samsung’s mend, especially during the critical seven months after a reasonable party in the same circumstances would have reasonably foreseen this suit, fell short of what it needed to do.”

The trial starts today, and while no one yet knows how the jury will rule, Judge Grewal’s instruction to the jury regarding the adverse inference certainly won’t help Samsung’s case:

“Samsung has failed to prevent the destruction of relevant evidence for Apple’s use in this litigation. This is known as the ‘spoliation of evidence.’

I instruct you, as a matter of law, that Samsung failed to preserve evidence after its duty to preserve arose. This failure resulted from its failure to perform its discovery obligations.

You also may presume that Apple has met its burden of proving the following two elements by a preponderance of the evidence: first, that relevant evidence was destroyed after the duty to preserve arose. Evidence is relevant if it would have clarified a fact at issue in the trial and otherwise would naturally have been introduced into evidence; and second, the lost evidence was favorable to Apple.

Whether this finding is important to you in reaching a verdict in this case is for you to decide. You may choose to find it determinative, somewhat determinative, or not at all determinative in reaching your verdict.”

The blog has previously covered several other cases involving adverse inference sanctions, including this one, this one, this one and this one.

So, what do you think? Will the “adverse inference” instruction decide this case? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.