eDiscovery Case Law: Defendant Responds to Plaintiffs’ Motion for Recusal in Da Silva Moore

Geez, you take a week or so to cover some different topics and a few things happen in the most talked-about eDiscovery case of the year. Time to catch up! Today, we’ll talk about the response of the defendant MSLGroup Americas to the plaintiffs’ motion for recusal in the Da Silva Moore case. Tomorrow, we will discuss the plaintiffs’ latest objection – to Magistrate Judge Andrew J. Peck’s rejection of their request to stay discovery pending the resolution of outstanding motions and objections. But, first, a quick recap.

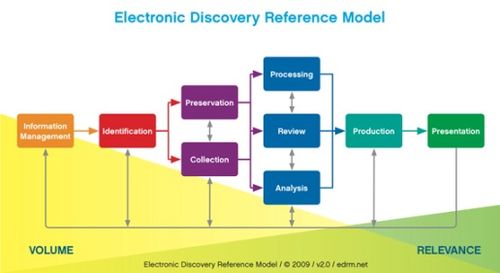

Several weeks ago, in Da Silva Moore v. Publicis Groupe & MSL Group, No. 11 Civ. 1279 (ALC) (AJP) (S.D.N.Y. Feb. 24, 2012), Judge Peck of the U.S. District Court for the Southern District of New York issued an opinion approving the use of computer-assisted review of electronically stored information (“ESI”), making this likely the first case to do so. However, on March 13, District Court Judge Andrew L. Carter, Jr. granted plaintiffs’ request to submit additional briefing on their February 22 objections to the ruling. In that briefing (filed on March 26), the plaintiffs claimed that the protocol approved for predictive coding “risks failing to capture a staggering 65% of the relevant documents in this case” and questioned Judge Peck’s relationship with defense counsel and with the selected vendor for the case, Recommind.

Then, on April 5, Judge Peck issued an order in response to Plaintiffs’ letter requesting his recusal, directing plaintiffs to indicate whether they would file a formal motion for recusal or ask the Court to consider the letter as the motion. On April 13 (Friday the 13th, that is), the plaintiffs chose the first option and filed a formal motion requesting the recusal of Judge Peck. But, on April 25, Judge Carter issued an opinion and order in the case, upholding Judge Peck’s opinion approving computer-assisted review.

As for the motion for recusal, that’s still pending. On Monday, April 30, the defendant filed a response (not surprisingly) opposing the motion for recusal. In its Memorandum of Law in Opposition to Plaintiffs’ Motion for Recusal or Disqualification, the defendant noted the following:

- Plaintiffs Agreed to the Use of Predictive Coding: Among the arguments here, the defendants noted that, after prior discussions regarding predictive coding, on January 3, the “[p]laintiffs submitted to the Court their proposed version of the ESI Protocol, which relied on the use of predictive coding. Similarly, during the January 4, 2012 conference itself, Plaintiffs, through their e-discovery vendor, DOAR, confirmed not only that Plaintiffs had agreed to the use of predictive coding, but also that Plaintiffs agreed with some of the details of the search methodology, including the ‘confidence levels’ proposed by MSL.”

- It Was Well Known that Judge Peck Was a Leader In eDiscovery Before The Case Was Assigned to Him: The defendants pointed out, among other things, that Judge Peck’s October 2011 article, Search, Forward, discussed “computer-assisted coding,” and that Judge Peck stated in the article: “Until there is a judicial decision approving (or even critiquing) the use of predictive coding, counsel will just have to rely on this article as a sign of judicial approval.”

- Ralph Losey Had No Ex Parte Contact with Judge Peck: The defendants noted that their expert, Ralph Losey, “has never discussed this case with Judge Peck” and that his “mere appearance” at seminars and conferences “does not warrant disqualification of all judges who also appear.”

As a result, the defendants argued that the court should deny plaintiffs’ motion for recusal because:

- Judge Peck’s “Well-Known Expertise in and Ongoing Discourse on the Topic of Predictive Coding Are Not Grounds for His Disqualification”;

- His “Professional Relationship with Ralph Losey Does Not Mandate Disqualification”;

- His “Comments, Both In and Out of the Courtroom, Do Not Warrant Recusal”; and

- His “Citation to Articles in his February 24, 2012 Opinion Was Proper”.

For details on these arguments, see the Memorandum referenced above. Judge Carter has yet to rule on the motion for recusal.

So, what do you think? Did the defendants make an effective argument, or should Judge Peck be recused? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.