eDiscovery Trends: Jim McGann of Index Engines

This is the third of the LegalTech New York (LTNY) Thought Leader Interview series. eDiscoveryDaily interviewed several thought leaders at LTNY this year and asked each of them the same three questions:

- What do you consider to be the current significant trends in eDiscovery on which people in the industry are, or should be, focused?

- Which of those trends are evident here at LTNY, which are not being talked about enough, and/or what are your general observations about LTNY this year?

- What are you working on that you’d like our readers to know about?

Today’s thought leader is Jim McGann. Jim is Vice President of Information Discovery at Index Engines. Jim has extensive experience with eDiscovery and information management in the Fortune 2000 sector. He has worked for leading software firms, including Information Builders and the French-based engineering software provider Dassault Systemes. In recent years he has worked for technology-based start-ups that provided financial services and information management solutions.

What do you consider to be the current significant trends in eDiscovery on which people in the industry are, or should be, focused?

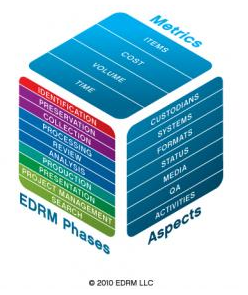

What we’re seeing is that companies are becoming a bit more proactive. Over the past few years we’ve seen companies that have simply been reacting to litigation, and it’s been a very painful process because ESI collection has been a “fire drill” – a very last-minute operation. Not because lawyers have waited and waited, but because the data collection process has been slow, complex and overly expensive. But things are changing. Companies are seeing that eDiscovery is here to stay, ESI collection is not going away, and the argument that data is too complex or expensive to collect no longer holds water. So, companies are starting to take a proactive stance on ESI collection and on understanding their data assets. We’re talking to companies that are not specifically responding to litigation; instead, they’re building a defensible policy that they can apply to their data sources to make data available on demand.

Which of those trends are evident here at LTNY, which are not being talked about enough, and/or what are your general observations about LTNY this year?

{Interviewed on the first morning of LTNY} Well, in walking the floor as people were setting up, you saw a lot of early case assessment last year; this year you’re seeing a lot of information governance. That’s showing that eDiscovery is really rolling into the records management/information governance area. At the CIO and General Counsel level, information governance is getting a lot of exposure and there’s a lot of technology that can solve the problems. Litigation support’s role will be to help the executives understand the available technology and how it applies to information governance and records management initiatives. You’ll see more information governance messaging, which is really a higher-level records management message.

As for other trends, one that I’ll tie Index Engines into is ESI collection and pricing. Per-GB pricing is going down as the volume of data is going up. Years ago, prices were a thousand dollars per GB, then hundreds of dollars per GB, etc. Now the cost is close to tens of dollars per GB. To really manage large volumes of data more cost-effectively, the collection price had to become more affordable. Because Index Engines can make data on backup tapes searchable very cost-effectively, for as little as $50 per tape, data on tape has become as easy to access and search as online data. Perhaps even easier because it’s not on a live network. Backup tapes have a bad reputation because people think of them as complex or expensive, but if you take away the complexity and expense (which is what Index Engines has done), then they really become “full point-in-time” snapshots. So, if you have litigation from a specific date range, you can request that data snapshot (which is a tape) and perform discovery on it. Tape is really a natural litigation hold when you think about it, and there is no need to perform the hold retroactively.

So, what does the ease with which information can be indexed from tape do to the “inaccessible” argument against tape retrieval?

That argument has been eroding over the years, thanks to technology like ours. And, you see decisions from judges like Judge Scheindlin saying “if you cannot find data in your primary network, go to your backup tapes”, indicating that they consider backup tapes the next source right after online networks. You also see people like Craig Ball writing that backup tapes may be the most convenient and cost-effective way to get access to data. If you had a choice between doing a “server crawl” in a corporate environment or just asking for a backup tape of that time frame, tape is the much more convenient and less disruptive option. So, if your opponent goes to the judge and says it’s going to take millions of dollars to get the information off of twenty tapes, you need to know enough to stand in front of the judge and say “that’s not accurate”. Those are old numbers. There are court cases where parties have been instructed to use tapes as a cost-effective means of getting to the data. Technology removes the inaccessible argument by making it easier, faster and cheaper to retrieve data from backup tapes.

The erosion of the accessibility burden is sparking the information governance initiatives. We’re seeing companies come to us for legacy data remediation or management projects, basically getting rid of old tapes. They are saying “if I’ve got ten years of backup tapes sitting in offsite storage, I need to manage that proactively and address any liability that’s there” (that they may not even be aware exists). These projects reflect a proactive focus towards information governance by remediating those tapes and getting rid of data they don’t need. Ninety-eight percent of the data on old tapes is not going to be relevant to any case. The remaining two percent can be found and put into the company’s litigation hold system, and then they can get rid of the tapes.

How do incremental backups play into that?

Tapes are very incremental and repetitive. If you’re backing up the same data over and over again, you may have 50+ copies of the same email. Index Engines technology automatically gets rid of system files and applies a standard MD5 hash to dedupe. Also, by using tape cataloguing, you can read the header and say “we have a Saturday full backup and five incrementals during the week, then another Saturday full backup”. You can ignore the incremental tapes and just go after the full backups. That’s a significant percentage of the tapes you can ignore.
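To make the two ideas in that answer concrete, here is a minimal Python sketch of catalogue-based tape selection followed by MD5 deduplication. It is an illustration only, not Index Engines’ implementation: the `TapeRecord` type, the `select_full_backups` and `dedupe_by_md5` helpers, and the sample catalogue are all hypothetical names invented for this example.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class TapeRecord:
    """Hypothetical catalogue entry built from a tape header."""
    label: str
    backup_type: str  # "full" or "incremental"


def select_full_backups(catalog):
    """Keep only the full backups; incremental tapes are skipped."""
    return [tape for tape in catalog if tape.backup_type == "full"]


def dedupe_by_md5(items):
    """Return the items with exact duplicates removed, keeping first occurrences.

    Two items count as duplicates when their MD5 digests match.
    """
    seen = set()
    unique = []
    for content in items:
        digest = hashlib.md5(content).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(content)
    return unique


# Assumed sample week: a Saturday full, five incrementals, another Saturday full.
catalog = (
    [TapeRecord("sat-1", "full")]
    + [TapeRecord(f"inc-{i}", "incremental") for i in range(1, 6)]
    + [TapeRecord("sat-2", "full")]
)
full_tapes = select_full_backups(catalog)

# Assumed sample messages: the same email backed up twice, plus one other email.
emails = [b"Subject: Q3 forecast", b"Subject: Q3 forecast", b"Subject: lunch"]
unique_emails = dedupe_by_md5(emails)

print([tape.label for tape in full_tapes])
print(len(unique_emails))
```

In this sample, five of the seven tapes drop out before any data is read, and the repeated email is kept only once.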

What are you working on that you’d like our readers to know about?

Index Engines just announced a partnership with LeClairRyan today. This partnership combines legal expertise in data retention with the technology that makes applying the policy to legacy data possible. For companies that want to build policy for the retention of legacy data and implement the tape remediation process, we have advisors like LeClairRyan that can provide legacy data consultation and oversight. By proactively managing the potential liability of legacy data, you are also saving the IT costs of exploring that data.

Index Engines also just announced a new cloud-based tape load service that will provide full identification, search and access to tape data for eDiscovery. The Look & Learn service, starting at $50 per tape, will provide clients with full access to the index of their tape data without the need to install any hardware or software. Customers will be able to search the index and gather knowledge about content, custodians, email and metadata all via cloud access to the Index Engines interface, making discovery of data from tapes even more convenient and affordable.

Thanks, Jim, for participating in the interview!

And to the readers, as always, please share any comments you might have or let us know if you’d like to know more about a particular topic!