Thought Leader Q&A: Alon Israely of BIA

Tell me about your company and the products you represent.

BIA is a full-solution e-discovery provider. Our core competencies are e-discovery collections and processing, but we offer services across the full spectrum of e-discovery. For almost a decade, BIA has been developing and implementing defensible, technology-driven solutions that reduce the costs and risks related to litigation, regulatory compliance and internal audits. BIA provides software and services to Fortune 1000 and Global 2000 companies and Am Law 100 law firms. We are headquartered in New York City and have offices in San Francisco, Seattle, Washington, DC and southwest Michigan. We also maintain digital evidence response units throughout the United States, Europe, Asia, and the Middle East.

BIA’s products are defensible and cost-effective: DiscoveryBOT™ offers defensible remote collections, our TD Grid system offers fast e-discovery processing, and Solis™ is our automated, secure legal hold software.

What is the best way for lawyers and litigation support professionals to take control of their e-discovery?

The best way for litigation support professionals to take control of their e-discovery is to scope projects correctly. It is important to understand that one size does not fit all in e-discovery. There are many tools and service providers out there, so it is important to focus first on what needs to be accomplished from a legal and IT perspective, and only then to determine which technologies and methods best fit that strategy.

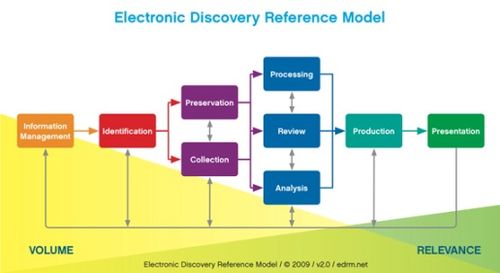

What is a good way to achieve predictability in e-discovery costs?

Most of the cost analysis in e-discovery today focuses on the review side, where the data has already been collected and perhaps culled. Even at that stage there are still too many documents, and most of them are not responsive. By focusing on the left side of the EDRM (Electronic Discovery Reference Model), you can see e-discovery costs early in the process. For example, using a good, light-touch collection tool and method to lock data down is one of the best ways to control e-discovery costs. Doing the right collection early on, and getting the right metrics from that collection, allows you to analyze the data (even at a high level, without incurring processing and other costs). That analysis helps the attorneys and the institutional client determine costs early in the process and in a more predictable manner.

Is there a way to perform self-collection in a defensible manner?

Yes. Use the right tools and methods and, importantly, have those tools and methods vetted (reviewed and approved) by e-discovery collection professionals. Defensible self-collection does NOT mean that the custodian or the IT staff are left to perform the collection on their own without the right plan behind them. There are best practices that should be followed, and there are tools that maintain the integrity of the data. Make sure those best practices and tools (scoped correctly, as discussed above) are applied by professionals, or at least by staff whose work is peer-reviewed or monitored by professionals. Also, rely on custodians for good ESI identification. The custodians (users) usually know better than anyone where they keep records, so using custodian questionnaires early on helps identify the systems that will be most relevant. That effort goes to diligence, an important factor in defensible collections. The professional can then work in tandem with the custodian to gather the data in a manner that ensures its evidentiary integrity. At BIA we have followed these methods for years and have been very successful in defending this approach to identifying and collecting ESI with our clients, the courts and opposing parties.

What is the importance of the left side of the EDRM?

The left side is where e-discovery starts. ESI collections are usually the most affordable part of the overall e-discovery process and arguably the most important: “garbage in, garbage out.” Because the subsequent parts of the process (i.e., the “right side of the EDRM”) rely on the data identified and gathered early on, it is imperative that the tasks and activities on the left side of the EDRM are performed correctly, maintaining the evidentiary integrity of the data collected. The left side of the EDRM also includes preserving data and notifying custodians of their obligation to preserve, which is a critical piece of defensible e-discovery, especially in light of Pension Committee and other recent cases. As for cost, the left side of the EDRM is where much of the planning for the rest of the process can occur without incurring substantial costs, and that planning goes a long way toward ascertaining the real costs and timing of the remainder of the e-discovery process.

About Alon Israely

Alon Israely has over fifteen years of experience in a variety of advanced computing-related technologies. Alon is a Senior Advisor in BIA’s Advisory Services group and currently oversees BIA’s product development for its core technology products. Prior to BIA, Alon consulted with law firms and their clients on a variety of technology issues, including expert witness services related to computer forensics, digital evidence management and data security. Before that, he was a senior member of several IT teams working on projects for Fortune 500 companies related to global network architecture and data migration projects for enterprise information systems. As a pioneer in the field of digital evidence collection and handling, Alon has worked on a wide variety of matters, including several notable financial fraud cases, large-scale multi-party international lawsuits, and corporate matters involving the SEC, FTC, and international regulatory boards. Alon holds a B.A. from UCLA and received his J.D. from New York Law School with an emphasis in Telecommunications Law. He is a member of the New York State Bar as well as several legal and computer forensic associations.