eDiscovery Privilege Review in the Trump-Cohen-Daniels Saga: eDiscovery Trends

This is not a political blog, and we try not to represent any political beliefs here. But sometimes a political story has an eDiscovery component that's interesting to cover. This is one of those times.

According to Bloomberg (Cohen Prosecutors Accept Neutral Review, Using Trump’s Words, written by Bob Van Voris and David Voreacos), prosecutors probing President Donald Trump’s lawyer said they are prepared to use a neutral outsider to review documents seized this month from the home and office of Michael Cohen, which was an about-face from the government’s initial plan to scrutinize the documents itself.

In a five-page letter to the judge last Thursday, prosecutors, referencing the president’s statement that Cohen was responsible for only “a tiny, tiny little fraction” of his legal work, argued that the special master’s document review could move swiftly. Along with Cohen’s earlier acknowledgment that he had just three legal clients this year, that may have undermined the lawyer’s claim that the seized records may contain “thousands, if not millions” of privileged communications from clients, said former federal prosecutor Renato Mariotti.

In their filing, prosecutors said they now recommend a special master process proposed by retired U.S. Magistrate Judge Frank Maas (a United States Magistrate Judge for the Southern District of New York for 17 years and a frequent speaker at various conferences about eDiscovery trends and best practices) to weed out records that might be covered by the attorney-client privilege. They had previously asked that a separate team of prosecutors be permitted to review the documents first — a procedure routinely employed in other cases involving such materials.

“We believe that using Judge Maas or another neutral retired former Magistrate Judge familiar with this electronic discovery process and with experience in ruling on issues of privilege will lead to an expeditious and fair review of the materials obtained through the judicially authorized search warrants,” prosecutors said in a letter filed shortly before a court hearing scheduled for noon last Thursday.

In a separate letter filed with the court, Maas said that as special master he could analyze potentially privileged materials through one of two methods. One would involve his review of a so-called privilege log, which would list all materials that any party says might be protected, as both Trump and Cohen have urged. The other would involve Maas directly reviewing the seized material himself to determine what may be privileged.

He preferred his own review, saying “privilege logs often are virtually useless as a tool to assist a judge or master, and their preparation is expensive and can cause delay.”

Prosecutors have said their probe is focused more on Cohen’s personal business and financial dealings than his legal work. They have seized documents relating to a 2016 payment to adult film actress Stormy Daniels, made by a company Cohen set up. Daniels claims to have had a tryst with Trump in 2006.

It will be interesting to see what happens from here. And, of course, I’m talking about an eDiscovery perspective! :o)

So, what do you think? Should a special master’s review to determine privilege be based on the documents themselves, or should it be based on a review of privilege logs? As always, please share any comments you might have, or let us know if you’d like to know more about a particular topic.

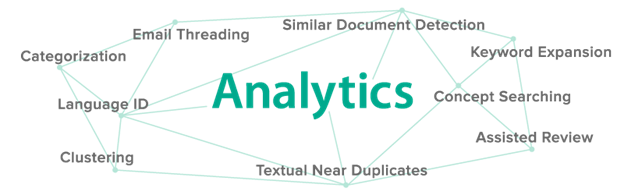

Sponsor: This blog is sponsored by CloudNine, which is a data and legal discovery technology company with proven expertise in simplifying and automating the discovery of data for audits, investigations, and litigation. Used by legal and business customers worldwide including more than 50 of the top 250 Am Law firms and many of the world’s leading corporations, CloudNine’s eDiscovery automation software and services help customers gain insight and intelligence on electronic data.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine. eDiscovery Daily is made available by CloudNine solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscovery Daily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.