How Big is Your ESI Collection, Really? – eDiscovery Best Practices

When I was at ILTA last week, this topic came up in a discussion with a colleague during the show, so I thought it would be good to revisit here.

After identifying the custodians relevant to the case, you’ve collected roughly 100 gigabytes (GB) of Microsoft Outlook email PST files and loose electronic files from them. You select a vendor to process the files and load them into a review tool, so that you can perform review and produce the files to opposing counsel. After processing, the vendor sends you a bill – and they’ve charged you to process over 200 GB!! Are they trying to overbill you?

Yes and no.

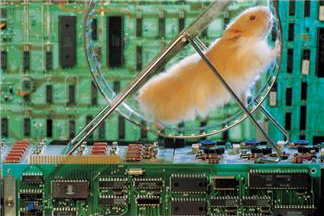

Many of the files in most ESI collections are stored in what are known as “archive” or “container” files. For example, while Outlook emails can be stored in different file formats, they are typically collected from each custodian and saved in a personal storage (.PST) file format, which is an expanding container file. The scanned size for the PST file is the size of the file on disk.

Did you ever see one of those vacuum bags that you store clothes in and then suck all the air out so that the clothes won’t take as much space? The PST file is like one of those vacuum bags – it often stores the emails and attachments in a compressed format to save space. Other types of archive container files compress their contents as well – .ZIP and .RAR files are two examples. These files are often used not only to compress files for storage on hard drives, but also to compact or group a set of files for transmission, often by email. With email comprising a major portion of most ESI collections, and with other archive container files popular for compressing file collections, the expanded size of your collection may be considerably larger than it appears when stored on disk.

When PST, ZIP, RAR or other compressed file formats are processed for loading into a review tool, they are expanded to their full, uncompressed size. This expanded size can be 1.5 to 2 times the scanned size (or more). And that’s what some vendors will bill processing on: the expanded size. In those cases, you won’t know the exact processing cost in advance, because the expanded size is difficult to determine until processing is complete.
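For ZIP files, at least, you can get a rough idea of the expansion before you send anything to a vendor. The sketch below uses Python’s standard `zipfile` module to compare an archive’s size on disk (the scanned size) with the total uncompressed size recorded for its members. The function names `zip_sizes` and `expansion_ratio` are my own, and the sketch makes some big assumptions: it only handles ZIP files (not PST or RAR), it does not look inside archives nested within the ZIP, and it trusts the sizes recorded in the archive’s directory rather than actually extracting anything.

```python
import os
import sys
import zipfile


def zip_sizes(path):
    """Return (scanned_bytes, expanded_bytes) for a single ZIP archive.

    scanned_bytes  - size of the archive file on disk
    expanded_bytes - sum of the uncompressed sizes recorded for each member
    """
    scanned = os.path.getsize(path)
    with zipfile.ZipFile(path) as archive:
        # ZipInfo.file_size is the uncompressed size of each member.
        expanded = sum(info.file_size for info in archive.infolist())
    return scanned, expanded


def expansion_ratio(path):
    """Return expanded size divided by scanned size for a ZIP archive."""
    scanned, expanded = zip_sizes(path)
    return expanded / scanned


if __name__ == "__main__":
    # Usage: python zip_expansion.py archive1.zip archive2.zip ...
    for zip_path in sys.argv[1:]:
        scanned, expanded = zip_sizes(zip_path)
        print(f"{zip_path}: scanned {scanned:,} bytes, "
              f"expanded {expanded:,} bytes, "
              f"ratio {expanded / scanned:.2f}")
```

Treat the result as an estimate only. A vendor’s processing tool may also expand nested containers and email attachments, so the expanded size on your bill could be higher than what this reports.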

It’s important to be prepared for that and know your options when processing that data. Make sure your vendor selection criteria include questions about how processing is billed: on the scanned size or on the expanded size. Some vendors (like the company I work for, CloudNine Discovery) do bill based on the scanned size of the collection for processing, so shop around to make sure you’re getting the best deal from your vendor.
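To see how much the billing basis matters, here is a small sketch of the arithmetic for the 100 GB example above. The per-GB rate is a made-up number purely for illustration (it is not any vendor’s actual price), and `processing_cost` is a hypothetical helper, not part of any tool.

```python
def processing_cost(scanned_gb, rate_per_gb, expansion_factor=1.0):
    """Estimate a processing bill.

    scanned_gb       - size of the collection on disk, in GB
    rate_per_gb      - price per GB processed (hypothetical)
    expansion_factor - 1.0 if billed on scanned size; otherwise the
                       ratio of expanded size to scanned size
    """
    if scanned_gb < 0 or rate_per_gb < 0 or expansion_factor <= 0:
        raise ValueError("sizes and rates must be non-negative, factor positive")
    return scanned_gb * expansion_factor * rate_per_gb


SCANNED_GB = 100          # the collection from the example above
RATE_PER_GB = 50.0        # assumed rate, for illustration only

billed_on_scanned = processing_cost(SCANNED_GB, RATE_PER_GB)
billed_on_expanded_low = processing_cost(SCANNED_GB, RATE_PER_GB, 1.5)
billed_on_expanded_high = processing_cost(SCANNED_GB, RATE_PER_GB, 2.0)

print(f"Billed on scanned size:  {billed_on_scanned:,.0f}")
print(f"Billed on expanded size: {billed_on_expanded_low:,.0f} "
      f"to {billed_on_expanded_high:,.0f}")
```

At the same per-GB rate, billing on the expanded size costs 1.5 to 2 times as much for this collection. In practice the rates themselves will differ from vendor to vendor, so compare the total, not just the rate.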

So, what do you think? Have you ever been surprised by the processing costs of your ESI? Please share any comments you might have or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.