eDiscovery Rewind: Eleven for 11-11-11

Since today is one of only 12 days this century on which the month, day and year are the same two-digit number (not to mention the biggest day for “craps” players to hit Las Vegas since July 7, 2007!), it seems an appropriate time to look back at some of our recent topics. So, in case you missed them, here are eleven of our recent posts that cover topics that hopefully make eDiscovery less of a “gamble” for you!

eDiscovery Best Practices: Testing Your Search Using Sampling: On April 1, we talked about how to determine an appropriate sample size to test your search results as well as the items NOT retrieved by the search, using a site that provides a sample size calculator. On April 4, we talked about how to make sure the sample set is randomly selected. In this post, we’ll walk through an example of how you can test and refine a search using sampling.
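For readers who like to see the arithmetic, here is a minimal sketch of the kind of calculation a sample size calculator typically performs, followed by a random draw of the sample set. It assumes the standard formula for estimating a proportion with a finite population correction; the calculator referenced in the original posts may work differently. The function names, the 95% confidence level (z = 1.96) and the 5% margin of error are illustrative assumptions, not values taken from those posts.

```python
import math
import random


def sample_size(population, z=1.96, margin=0.05, proportion=0.5):
    """Estimate how many documents to sample from a set of `population` items.

    Assumed defaults: z=1.96 (about 95% confidence), a 5% margin of error,
    and proportion=0.5, which is the most conservative choice.
    """
    # Sample size for an effectively unlimited population.
    n0 = (z ** 2) * proportion * (1 - proportion) / (margin ** 2)
    # Finite population correction shrinks the sample for smaller sets.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


def draw_sample(doc_ids, n, seed=None):
    """Pick n distinct document IDs at random (without replacement)."""
    rng = random.Random(seed)
    return rng.sample(doc_ids, n)


if __name__ == "__main__":
    # Hypothetical collection: 100,000 documents NOT retrieved by the search.
    not_retrieved = list(range(100_000))
    n = sample_size(len(not_retrieved))
    to_review = draw_sample(not_retrieved, n, seed=11)
    print(n, len(to_review))
```

The same two steps apply whether you are sampling the search results or the items the search left behind; only the population changes.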

eDiscovery Best Practices: Your ESI Collection May Be Larger Than You Think: Here’s a sample scenario: You identify custodians relevant to the case and collect files from each. Roughly 100 gigabytes (GB) of Microsoft Outlook email PST files and loose “efiles” is collected in total from the custodians. You identify a vendor to process the files to load into a review tool, so that you can perform first pass review and, eventually, linear review and produce the files to opposing counsel. After processing, the vendor sends you a bill – and they’ve charged you to process over 200 GB!! What happened?!?

eDiscovery Trends: Why Predictive Coding is a Hot Topic: Last month, we considered a recent article about the use of predictive coding in litigation by Judge Andrew Peck, United States magistrate judge for the Southern District of New York. The piece has prompted a lot of discussion in the profession. While most of the analysis centered on how much lawyers can rely on predictive coding technology in litigation, there were some deeper musings as well.

eDiscovery Best Practices: Does Anybody Really Know What Time It Is?: Does anybody really know what time it is? Does anybody really care? OK, it’s an old song by Chicago (back then, they were known as the Chicago Transit Authority). But, the question of what time it really is has a significant effect on how eDiscovery is handled.

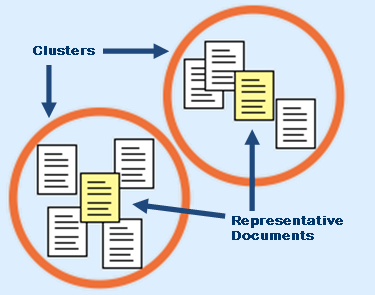

eDiscovery Best Practices: Message Thread Review Saves Costs and Improves Consistency: Insanity is doing the same thing over and over again and expecting a different result. But, in ESI review, it can be even worse when you get a different result. Most email messages are part of a larger discussion, which could be just between two parties, or include a number of parties in the discussion. To review each email in the discussion thread would result in much of the same information being reviewed over and over again. Instead, message thread analysis pulls those messages together and enables them to be reviewed as an entire discussion.

eDiscovery Best Practices: When Collecting, Image is Not Always Everything: There was a commercial in the early 1990s for Canon cameras in which tennis player Andre Agassi uttered the quote that would haunt him for most of his early career – “Image is everything.” When it comes to eDiscovery preservation and collection, there are times when “Image is everything”, as in a forensic “image” of the media is necessary to preserve all potentially responsive ESI. However, forensic imaging of media is usually not necessary for Discovery purposes.

eDiscovery Trends: If You Use Auto-Delete, Know When to Turn It Off: Federal Rule of Civil Procedure 37(f), adopted in 2006, is known as the “safe harbor” rule. While it’s not always clear to what extent “safe harbor” protection extends, one case from a few years ago, Disability Rights Council of Greater Washington v. Washington Metrop. Trans. Auth., D.D.C. June 2007, seemed to indicate where it does NOT extend – auto-deletion of emails.

eDiscovery Best Practices: Checking for Malware is the First Step to eDiscovery Processing: A little over a month ago, I noted that we hadn’t missed a (business) day yet in publishing a post for the blog. That streak almost came to an end back in May. As I often do in the early mornings before getting ready for work, I spent some time searching for articles to read and identifying potential blog topics and found a link on a site related to “New Federal Rules”. Curious, I clicked on it and…up popped a pop-up window from our virus checking software (AVG Anti-Virus, or so I thought) that the site had found a file containing a “trojan horse” program. The odd thing about the pop-up window is that there was no “Fix” button to fix the trojan horse. So, I chose the best available option to move it to the vault. Then, all hell broke loose.

eDiscovery Trends: An Insufficient Password Will Thwart Even The Most Secure Site: Several months ago, we talked about how most litigators have come to accept that Software-as-a-Service (SaaS) systems are secure. However, according to a recent study by the Ponemon Institute, the chance of any business being hacked in the next 12 months is a “statistical certainty”. No matter how secure a system is, whether it’s local to your office or stored in the “cloud”, an insufficient password that can be easily guessed can allow hackers to get in and steal your data.
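To give a rough sense of why a short, simple password is “insufficient”, the sketch below counts how many candidates an attacker would have to try in an exhaustive guess. It is only an illustration under stated assumptions: the function names are hypothetical, and the model applies only to passwords whose characters are chosen at random. Dictionary words and common patterns are far easier to guess than these numbers suggest.

```python
import math
import string


def search_space(length, alphabet_size):
    """Number of possible passwords of a given length over an alphabet."""
    return alphabet_size ** length


def entropy_bits(length, alphabet_size):
    """Equivalent strength in bits for a randomly chosen password."""
    return length * math.log2(alphabet_size)


# Alphabet sizes taken from Python's standard character sets.
lowercase = len(string.ascii_lowercase)
mixed = len(string.ascii_letters + string.digits + string.punctuation)

if __name__ == "__main__":
    for label, length, size in [
        ("6 lowercase letters", 6, lowercase),
        ("12 mixed characters", 12, mixed),
    ]:
        print(label, search_space(length, size), round(entropy_bits(length, size), 1))
```

Each added character multiplies the search space by the size of the alphabet, which is why length tends to matter more than any single substitution trick.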

eDiscovery Trends: Social Media Lessons Learned Through Football: The NFL Football season began back in September with the kick-off game pitting the last two Super Bowl winners – the New Orleans Saints and the Green Bay Packers – against each other to start the season. An incident associated with my team – the Houston Texans – recently illustrated the issues associated with employees’ use of social media sites, which are being faced by every organization these days and can have eDiscovery impact as social media content has been ruled discoverable in many cases across the country.

eDiscovery Strategy: "Command" Model of eDiscovery Must Make Way for Collaboration: In her article "E-Discovery 'Command' Culture Must Collapse" (via Law Technology News), Monica Bay discusses the old “command” style of eDiscovery, with a senior partner leading his “troops” like General George Patton – a model that summit speakers agree is "doomed to failure" – and reports on the findings put forward by judges and litigators that the time has come for true collaboration.

So, what do you think? Did you learn something from one of these topics? If so, which one? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

eDiscoveryDaily would like to thank all veterans and the men and women serving in our armed forces for the sacrifices you make for our country. Thanks to all of you and your families and have a happy and safe Veterans Day!