Just a Reminder to Think Before You Hit Send – eDiscovery Best Practices

With Anthony Weiner’s announcement that he is attempting a political comeback by running for mayor of New York City, it’s worth remembering the “Twittergate” story that cost him his congressional seat in the first place. The point is not to bash him, but to remind all of us how important it is to think before you hit send (even if he did start his campaign by using a picture of Pittsburgh’s skyline instead of NYC’s; oops!). Here is another reminder of that fact.

Chili’s Waitress Fired Over Facebook Post Insulting ‘Stupid Cops’

As noted on jobs.aol.com, a waitress at an Oklahoma City Chili’s posted a photo of three Oklahoma County Sheriff’s deputies on her Facebook page along with the comment: “Stupid Cops better hope I’m not their server FDP.” (A handy abbreviation for F*** Da Police.)

The woman, Ashley Warden, might have had reason to hold a grudge against her local police force. Last year she made national news when her potty-training toddler pulled down his pants in his grandmother’s front yard, and a passing officer handed Warden a public urination ticket for $2,500. (The police chief later apologized and dropped the charges, while the ticketing officer was fired.)

Nonetheless, Warden’s Facebook post quickly went viral on law enforcement sites and Chili’s was barraged with calls demanding that she be fired. Chili’s agreed. “With the changing world of digital and social media, Chili’s has Social Media Guidelines in place, asking our team members to always be respectful of our guests and to use proper judgement when discussing actions in the work place …,” the restaurant chain said in a statement. “After looking into the matter, we have taken action to prevent this from happening again.”

Best Practices and Social Media Guidelines

Another post on jobs.aol.com discusses additional examples of people losing their jobs over Facebook posts, along with six tips for keeping your posts within the protections enforced by the National Labor Relations Board (NLRB), the federal agency tasked with protecting employees’ rights to association and union representation.

Those tips may help, but as the article notes, the NLRB “has struggled to define how these rights apply to the virtual realm”. It’s also worth noting that, in its statement, Chili’s pointed to its social media guidelines as the basis for the termination. As we discussed on this blog some time ago, it’s a good idea to have a social governance policy in place that covers what employees should and should not do when using outside email, chat and social media (and that post identified several factors such a policy should address).

In these days of pervasive social media, thinking before you hit send means, among other things, being familiar with your organization’s social media policies and complying with them, especially if you’re going to post anything related to your job. It also means educating yourself about what you should and should not do when posting to social media sites.

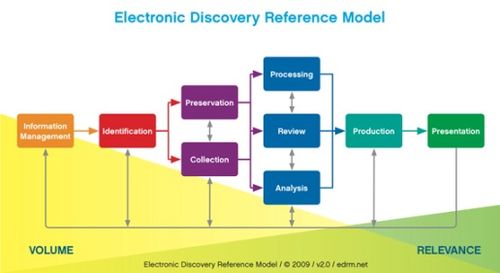

Of course it’s also important to remember that social media factors into discovery more than ever these days, as these four cases (just from the first few months of this year) illustrate.

So, what do you think? Does your organization have social media guidelines? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

eDiscovery Daily will return after the Memorial Day Holiday.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.