200,000 Visits on eDiscovery Daily! – eDiscovery Milestones

While we may be “just a bit behind” Google in popularity (900 million visits per month), we’re proud to announce that yesterday eDiscoveryDaily reached the 200,000 visit milestone! It took us a little over 21 months to reach 100,000 visits and just over 11 months to get to 200,000 (don’t tell my boss, he’ll expect 300,000 in 5 1/2 months). When we reach key milestones, we like to take a look back at some of the recent stories we’ve covered, so here are some recent eDiscovery items of interest.

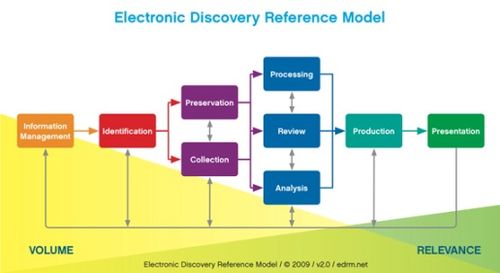

EDRM Data Set “Controversy”: In several posts, most recently last Friday, we covered the discussion of personally identifiable information (PII), including social security numbers, credit card numbers, dates of birth, home addresses and phone numbers, within the Electronic Discovery Reference Model (EDRM) Enron Data Set, and the “controversy” over the effort to clean it up (additional posts here and here).

Minnesota Implements Changes to eDiscovery Rules: States continue to be busy with changes to eDiscovery rules. One such state is Minnesota, which has amended its rules to emphasize proportionality, collaboration, and informality in the discovery process.

Changes to Federal eDiscovery Rules Could Be Coming Within a Year: Another major set of amendments to the discovery provisions of the Federal Rules of Civil Procedure is getting closer and could be adopted within the year. The United States Courts’ Advisory Committee on Civil Rules voted in April to send a slate of proposed amendments up the rulemaking chain, to its Standing Committee on Rules of Practice and Procedure, with a recommendation that the proposals be approved for publication and public comment later this year.

I Tell Ya, Information Governance Gets No Respect: A new report from 451 Research has indicated that “although lawyers are bullish about the prospects of information governance to reduce litigation risks, executives, and staff of small and midsize businesses, are bearish and ‘may not be placing a high priority’ on the legal and regulatory needs for litigation or government investigation.”

Is it Time to Ditch the Per Hour Model for Document Review?: Some recent stories involving alleged overbilling by law firms for legal work (much of it for document review) raise the question of whether it’s time to ditch the per-hour model for document review in favor of a per-document rate.

Fulbright’s Litigation Trends Survey Shows Increased Litigation, Mobile Device Collection: According to Fulbright’s 9th Annual Litigation Trends Survey, released last month, companies in the United States and United Kingdom continue to deal with, and spend more on, litigation. From an eDiscovery standpoint, the survey showed an increase in requirements to preserve and collect data from employee mobile devices, a high reliance on self-preservation to fulfill preservation obligations, and a decent percentage of organizations using technology assisted review.

We also covered Craig Ball’s Eight Tips to Quash the Cost of E-Discovery (here and here) and interviewed Adam Losey, the editor of IT-Lex.org (here and here).

Jane Gennarelli has continued her terrific series on Litigation 101 for eDiscovery Tech Professionals: 32 posts so far, and here is the latest.

We’ve also had 15 posts about case law in just the last 2 months (and 214 overall!). Here is a link to our case law posts.

On behalf of everyone at CloudNine Discovery who has worked on the blog over the last 32+ months, thanks to all of you who read the blog every day! In addition, thanks to the other publications that have picked up and either linked to or republished our posts! We really appreciate the support! Now, on to 300,000!

And, as always, please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.