Data Processing: The Key To Expanding Your LSP’s Bottom Line

Ask any legal service provider (LSP) about how they can increase their revenue and you’ll undoubtedly get a variety of answers, such as:

- Go paperless

- Use different fee structures

- Fire non paying clients

- Automate labor intensive tasks

While opportunities vary depending on a variety of factors, one consistent challenge you might come across is setting aside the time to explore any new possibilities. Freeing up time will allow your LSP to grow in new and unexpected ways and give your organization the flexibility to adapt to unforeseen demands.

All you have to do is find ways to increase your productivity and the easiest way to do this is through new technology. In fact, many law firms are already headed in this direction:

- 53% of law firms think technology will boost their revenue by allowing them to offer better services.

- 65% of law firms increased their technology investments in 2019.

- 25% of tech budgets were allocated to ‘innovative technologies.’

Where’s the best place to start? Simple. You need to streamline your data processing workflows.

Common Data Processing Challenges You Might Encounter

Not all data processing tools are designed equally. There are some that present their own unique challenges that could hinder your ability to meet your true potential.

In order to streamline and free up your LSP’s time, keep the following in mind when looking at potential data processing tools:

- Can you edit and add newly discovered fields?

- Having the flexibility to edit and add newly discovered fields will prevent your team from having to review data over and over again.

- Should every piece of data be pushed to the review platform?

- If every piece of data is pushed to the eDiscovery review platform, your LSP may have to pay higher hosting fees.

- Is the data stored in a public cloud-based environment?

- Storing data in the public cloud could cause your organization to lose control of the data and hosting costs.

- Can you filter out data with a true NIST list?

- This provides your organization insight into another level of data that doesn’t need to be reviewed, and sometimes, can be used to inflate your case.

Some companies charge $15 per GB per month to host your data in a public cloud environment. That doesn’t give you much room to work with when offering the best deal with your clients.

The alternative? Hosting your data in the private cloud allows you to have more control over hosting costs, which allows you to boost your bottom line. Having a data management tool in place will grant your LSP the data control and security you need. For even more ways to build value with CloudNine, download our eBook: 4 Ways Legal Service Providers Can Build Value and Boost Margins.

The Perks of Being an Efficient Data Processing Tool

Storing your raw data on-prem or in a private cloud lets you control your hosting costs, often dropping the cost down to less than $1 per GB per month. This allows you to reasonably mark up your hosting costs, boosting your bottom line while still providing good value for your clients.

Determining the efficiency of a data processing tool comes down to two factors:

- How you’re using the eDiscovery tool

- Your data size

The more data you have, the longer it will take to process. Setting up your data processing engine to perform top-level culls removes duplicative or irrelevant data before your even begin. This cuts down on the amount of data pushed further down the line within the workflow.

An efficient data processing tool allows for more automation, allowing you to work on other tasks and optimizing your employee’s time on the clock.

A truly efficient tool allows you to set alerts that let you or the next person in the workflow know when processing is complete. This means you don’t have to have anyone sitting there, watching and waiting for the system to complete its task.

How CloudNine Explore Can Save You Time and Money

In many cases, CloudNine Explore saves you approximately 30% of your costs associated with the interaction between your data and your team. If you are asking how that is possible, we break down 4 money-saving tips in our latest eBook.

Through our data culling functionality that removes duplicate data points, we reduce the amount of data you send to review.

Plus, other platforms make a copy of your data as you expand it, creating duplicative and irrelevant data that you have to pay to store. In CloudNine Explore, your data is only expanded after it’s culled and filtered, removing irrelevant data along the way. It’s only then that your data is copied and exported to your review platform.

By reducing this initial data size, you save hosting costs and time further down the workflow process.

- Analyze your data quicker

- Process your data more efficiently

- Speed up decision-making processes

If you’re ready to utilize eDiscovery law software to improve your data process efficiencies so you have more time and money to explore new, alternative revenue streams, let CloudNine help you. Sign up for our free demo today.

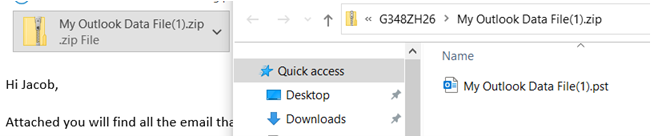

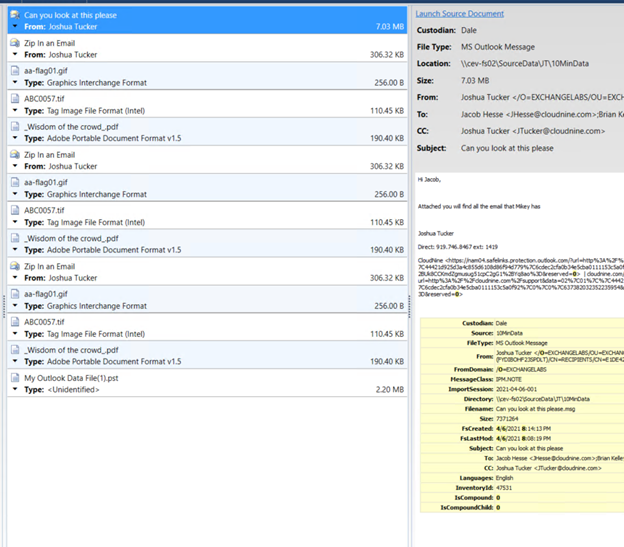

A custodian creates a PST file containing several dozen messages about a particular topic and emails it to a co-worker.

A custodian creates a PST file containing several dozen messages about a particular topic and emails it to a co-worker.