Baby, You Can Drive My CARRM – eDiscovery Trends

Full disclosure: this post is NOT about the Beatles’ song, but I liked the title.

There have been a number of terms applied to using technology to aid in eDiscovery review, including technology assisted review (often referred to by its acronym “TAR”) and predictive coding. Another term is Computer Assisted Review (which lends itself to the obvious acronym of “CAR”).

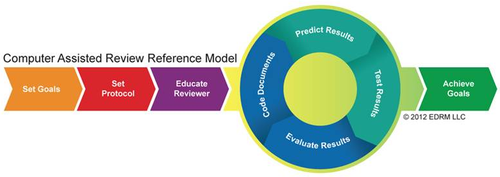

Now, the Electronic Discovery Reference Model (EDRM) is looking to provide an “owner’s manual” to that CAR with its new draft Computer Assisted Review Reference Model (CARRM), which depicts the flow for a successful CAR project. The CAR process depends on, among other things, a sound approach for identifying appropriate example documents for the collection, educated and knowledgeable reviewers who can code those documents appropriately, and testing and evaluation of the results to confirm success. That’s why the “A” in CAR stands for “assisted”: regardless of how good the tool is, a flawed approach will yield flawed results.

As noted on the EDRM site, the major steps in the CARRM process are:

Set Goals

The process of deciding the outcome of the Computer Assisted Review process for a specific case. Some of the outcomes may be:

- reduction and culling of not-relevant documents;

- prioritization of the most substantive documents; and

- quality control of the human reviewers.

Set Protocol

The process of building the human coding rules that take into account the use of CAR technology. CAR technology must be taught about the document collection by having the human reviewers submit documents to be used as examples of a particular category, e.g. Relevant documents. Creating a coding protocol that can properly incorporate the fact pattern of the case and the training requirements of the CAR system takes place at this stage. An example of a protocol determination is to decide how to treat the coding of family documents during the CAR training process.

Educate Reviewer

The process of transferring the review protocol information to the human reviewers prior to the start of the CAR Review.

Code Documents

The process of human reviewers applying subjective coding decisions to documents in an effort to adequately train the CAR system to “understand” the boundaries of a category, e.g. Relevancy.

Predict Results

The process of the CAR system applying the information “learned” from the human reviewers and classifying a selected document corpus with pre-determined labels.

Test Results

The process of human reviewers using a validation process, typically statistical sampling, in an effort to create a meaningful metric of CAR performance. The metrics can take many forms: they may include estimates of defect counts in the classified population, or use information retrieval metrics like Precision, Recall and F1.

Evaluate Results

The process of the review team deciding if the CAR system has achieved the goals anticipated by the review team.

Achieve Goals

The process of ending the CAR workflow and moving to the next phase in the review lifecycle, e.g. Privilege Review.
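To make the metrics named in the Test Results step a little more concrete, here is a minimal Python sketch of how Precision, Recall, F1 and a defect-count estimate might be computed from a human-reviewed validation sample. The `ValidationSample` structure, the function names and the example numbers are all assumptions made for illustration; they are not part of CARRM or of any particular CAR tool, and the results are simple point estimates with no confidence interval.

```python
from dataclasses import dataclass


@dataclass
class ValidationSample:
    """Counts from a human-reviewed validation sample (hypothetical structure)."""
    true_positives: int   # system said Relevant, reviewer agreed
    false_positives: int  # system said Relevant, reviewer said Not Relevant
    false_negatives: int  # system said Not Relevant, reviewer said Relevant


def precision(s: ValidationSample) -> float:
    """Share of documents predicted Relevant that reviewers also found Relevant."""
    predicted = s.true_positives + s.false_positives
    return s.true_positives / predicted if predicted else 0.0


def recall(s: ValidationSample) -> float:
    """Share of truly Relevant documents that the system found."""
    actual = s.true_positives + s.false_negatives
    return s.true_positives / actual if actual else 0.0


def f1(s: ValidationSample) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(s), recall(s)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def estimate_defects(sample_size: int, defects_in_sample: int,
                     population_size: int) -> int:
    """Scale the defect rate seen in a random sample up to the classified population."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return round(defects_in_sample * population_size / sample_size)


# Illustrative numbers only, not taken from any real review.
sample = ValidationSample(true_positives=90, false_positives=10, false_negatives=30)
print(f"precision={precision(sample):.2f} recall={recall(sample):.2f} f1={f1(sample):.2f}")

# Example: 10 Relevant documents found in a 500-document sample drawn from
# 20,000 documents the system classified as Not Relevant.
print("estimated missed documents:", estimate_defects(500, 10, 20000))
```

In practice a review team would usually pair numbers like these with a margin of error that reflects the sample size before deciding whether the goals have been met.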

The diagram does a good job of reflecting the linear steps (Set Goals, Set Protocol, Educate Reviewer and, at the end, Achieve Goals) and a circle to represent the iterative steps (Code Documents, Predict Results, Test Results and Evaluate Results) that may need to be performed more than once to achieve the desired results. It’s a very straightforward model to represent the process. Nicely done!
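For readers who think in code, the shape of the model (linear steps, then a loop, then an exit) can be sketched in a few lines of Python. Everything here is hypothetical: the function `run_car_workflow`, its callback parameters and the stand-in numbers are assumptions used only to illustrate the iteration, not an EDRM-defined interface or a real CAR system.

```python
from typing import Any, Callable, Tuple


def run_car_workflow(
    code_documents: Callable[[int], Any],
    predict_results: Callable[[Any], Any],
    test_results: Callable[[Any], int],
    goals_met: Callable[[int], bool],
    max_rounds: int = 10,
) -> Tuple[int, int, bool]:
    """Repeat the four iterative steps until the goals are met or rounds run out.

    Returns (rounds_used, last_metric, achieved).
    """
    metric = 0
    for round_number in range(1, max_rounds + 1):
        coded = code_documents(round_number)   # Code Documents
        predictions = predict_results(coded)   # Predict Results
        metric = test_results(predictions)     # Test Results
        if goals_met(metric):                  # Evaluate Results
            return round_number, metric, True  # Achieve Goals
    return max_rounds, metric, False


# Stand-in callbacks: each round of coding adds 100 training documents, and the
# made-up "metric" (a percentage) improves by 10 points per round.
rounds, metric, achieved = run_car_workflow(
    code_documents=lambda n: n * 100,
    predict_results=lambda coded: coded // 100,
    test_results=lambda rounds_done: 50 + 10 * rounds_done,
    goals_met=lambda pct: pct >= 75,
)
print(rounds, metric, achieved)
```

The goal threshold and the round limit correspond to decisions made in the Set Goals and Set Protocol steps, which is why they are passed in rather than hard-coded inside the loop.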

Nonetheless, it’s a draft version of the model and EDRM wants your feedback. You can send your comments to mail@edrm.net or post them on the EDRM site here.

So, what do you think? Does the CARRM model make computer assisted review more straightforward? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.