eDiscovery Throwback Thursdays – How Databases Were Used, Circa Early 1980s, Part 5

So far in this blog series, we’ve taken a look at the ‘litigation support culture’ in the late 1970s and early 1980s, covered how databases were built, and started discussing how databases were used. We’re going to continue that in this post. But first, if you missed the earlier posts in this series, they can be found here, here, here, here, here, here, here and here.

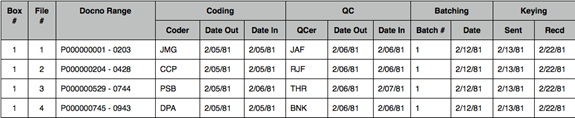

In last week’s post, we covered searching a database. As I mentioned, searches were typically done by a junior-level litigation team member who was trained to use the search engine. Search results were printed on thermal paper, and that paper was flattened, folded accordion-style, and given to a senior attorney to review, with the goal of identifying the documents he or she would like to see. Those printouts included the information that a coder had recorded for each document. A typical database record on a printout might look like this:

DocNo: PL00004568 – 4572

DocDate: 08/15/72

DocType: LETTER

Title: 556 Specifications

Characteristics: ANNOTATED; NO SIGNATURE

Author: Jackson-P

Author Org: ABC Inc.

Recipient: Parker-T

Recipient Org: XYZ Corp.

Copied: Franco-W; Hopkins-R

Copied Org: ABC Inc.

Mentioned: Phillips-K; Andrews-C

Subjects: A122 Widget 556; C320 Instructions

Contents: This letter includes specifications for product 556 and requests confirmation that it meets requirements.

Source: ABC-Parker
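For readers who think in code, here is a rough modern sketch of that coded record and of a name lookup across its people fields. It is only an illustration: the class name `CodedRecord`, the function `search_by_name`, and the choice of which fields to check are my assumptions, not a description of the search engine we actually used back then.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CodedRecord:
    """Hypothetical model of one coded document, based on the sample printout."""
    doc_no: str
    doc_date: str
    doc_type: str
    title: str
    author: List[str] = field(default_factory=list)
    recipient: List[str] = field(default_factory=list)
    copied: List[str] = field(default_factory=list)
    mentioned: List[str] = field(default_factory=list)
    subjects: List[str] = field(default_factory=list)

    def names(self) -> set:
        """All coded names on the record, upper-cased for case-insensitive matching."""
        people = self.author + self.recipient + self.copied + self.mentioned
        return {name.upper() for name in people}


def search_by_name(records: List[CodedRecord], name: str) -> List[str]:
    """Return the document numbers of records where the name was coded in any people field."""
    target = name.upper()
    return [r.doc_no for r in records if target in r.names()]


# The sample record from the printout above.
record = CodedRecord(
    doc_no="PL00004568 – 4572",
    doc_date="08/15/72",
    doc_type="LETTER",
    title="556 Specifications",
    author=["Jackson-P"],
    recipient=["Parker-T"],
    copied=["Franco-W", "Hopkins-R"],
    mentioned=["Phillips-K", "Andrews-C"],
    subjects=["A122 Widget 556", "C320 Instructions"],
)

hits = search_by_name([record], "phillips-k")
print(hits)
```

In this sketch, a search for a name checks the Author, Recipient, Copied and Mentioned fields together, so a document is returned even when the person is only mentioned in it.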

The attorney reviewing the printout would determine (based on the coded information) which documents to review – checking those off with a pen.

The marked-up printout was delivered to the archive librarian for ‘pulling’. We NEVER turned over the original (from the archive’s ‘original working copy’). Rather, an archive clerk worked with the printout, locating boxes that included checked documents, and locating the documents within those boxes. The clerk made a photocopy of each document, returned the originals to their boxes, and placed the photocopies in a second box. When the ‘document pull’ was complete, a QC clerk verified the copies against the printout to ensure nothing was missed, and then the copies were delivered to the attorney.

In last week’s post, I mentioned how long it took for a database to get built. Once the database was available for use, retrievals were slow by today’s standards. Depending on the number of documents to be pulled, it could take days for an attorney to get a stack of documents back to review. While that would be unacceptable today, it was a huge improvement over the alternative at the time, which was to flip through an entire document collection, eyeballing every page for documents of interest. For example, when preparing for a deposition, a team of paralegals would go through boxes of documents page by page, looking for the deponent’s name.

Working with a database then was, by today’s standards, done at a snail’s pace. But compared with manual review, the time savings were significant, and the search results were usually more thorough. On one project I managed, just as the database loading was completed, an attorney called me to say he was preparing for a deposition and had his paralegals manually review the collection looking for the deponent’s name. They spent a week doing it and found under 200 documents. He was uncomfortable with those results. I told him the database was almost available (we just had to do some testing), but I could do a search for him. I did that while he waited on the phone and quickly reported back to him that the database search found almost twice as many documents. We delivered the documents to him within a couple of days.

Tune in next week and we’ll cover how the litigation world circa 1980 evolved and got to where it is today.

Please let us know if there are eDiscovery topics you’d like to see us cover in eDiscoveryDaily.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.