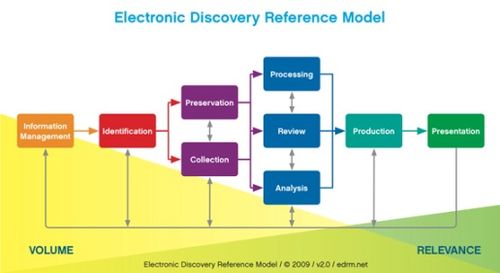

Sharon Nelson wrote a terrific post about the “controversy” regarding the Electronic Discovery Reference Model (EDRM) Enron Data Set in her Ride the Lightning blog (Is the Enron E-Mail Data Set Worth All the Mudslinging?). I wanted to repeat some of her key points here and offer some perspective of my own, drawn directly from sitting in on the Data Set team's session at the EDRM Annual Meeting earlier this month.

But First, a Recap

To recap, the EDRM Enron Data Set, sourced from the FERC Enron Investigation release made available by Lockheed Martin Corporation, has been a valuable resource for eDiscovery software demonstration and testing (we covered it here back in January 2011). The data was initially made available for download on the EDRM site and was subsequently moved to Amazon Web Services (AWS). However, after much recent discussion about personally identifiable information (PII) (including Social Security numbers, credit card numbers, dates of birth, home addresses and phone numbers) available within the FERC release (and consequently within the EDRM Data Set), the EDRM Data Set was taken down from the AWS site.

Then, a couple of weeks ago, EDRM, along with Nuix, announced that they had republished version 1 of the EDRM Enron PST Data Set (which contains over 1.3 million items) after cleansing it of private, health and personal financial information. Nuix and EDRM also published the methodology Nuix’s staff used to identify and remove more than 10,000 high-risk items, including credit card numbers (60 items), Social Security or other national identity numbers (572), individuals’ dates of birth (292) and other personal data. All personal data gone, right?

Not so fast.

As noted in this Law Technology News article by Sean Doherty (Enron Sandbox Stirs Up Private Data, Again), “Index Engines (IE) obtained a copy of the Nuix-cleansed Enron data for review and claims to have found many ‘social security numbers, legal documents, and other information that should not be made public.’ IE evidenced its ‘find’ by republishing a redacted version of a document with PII” (actually, a handful of them). IE and others were quite critical of the effort by Nuix/EDRM and the extent of the PII data still remaining.

As he does so well, Rob Robinson has compiled a list of articles, comments and posts related to the PII issue; here is the link.

Collaboration, Not Criticism

Sharon’s post had several observations regarding the data set “controversy”, some of which are repeated here:

- “Is the legal status of the data pretty clear? Yes, when a court refused to block it from being made public apparently accepting the greater good of its release, the status is pretty clear.”

- “Should Nuix be taken to task for failure to wholly cleanse the data? I don’t think so. I am not inclined to let perfect be the enemy of the good. A lot was cleansed and it may be fair to say that Nuix was surprised by how much PII remained.”

- “The terms governing the download of the data set made clear that there was no guarantee that all the PII was removed.” (more on that below in my observations)

- “While one can argue that EDRM should have done something about the PII earlier, at least it is doing something now. It may be actively helpful to Nuix to point out PII that was not cleansed so it can figure out why.”

- “Our expectations here should be that we are in the midst of a cleansing process, not looking at the data set in a black or white manner of cleansed or uncleansed.”

- “My suggestion? Collaboration, not criticism. I believe Nuix is anxious to provide the cleanest version of the data possible – to the extent that others can help, it would be a public service.”

My Perspective from the Data Set Meeting

I sat in on part of the Data Set meeting earlier this month, and there were a couple of points discussed during the meeting that I thought were worth relaying:

1. We understood that there was no guarantee that all of the PII data was removed.

As with any such process, we understood that there was no effective way to guarantee that all PII had been removed once the process was complete, and we discussed the need for a mechanism that lets people continue to report any PII they find. On the download page for the data set, there was a link to the legal disclaimer page, which states in section 1.8:

“While the Company endeavours to ensure that the information in the Data Set is correct and all PII is removed, the Company does not warrant the accuracy and/or completeness of the Data Set, nor that all PII has been removed from the Data Set. The Company may make changes to the Data Set at any time without notice.”

With regard to a mechanism for reporting persistent PII data, there is this statement on the Data Set page on the EDRM site:

“PII: These files may contain personally identifiable information, in spite of efforts to remove that information. If you find PII that you think should be removed, please notify us at mail@edrm.net.”

2. We agreed that any documents with PII data should be removed, not redacted.

Because the original data set, with all of the original PII, is available via FERC, we agreed that any documents containing sensitive personal information should be removed from the data set, NOT redacted. In essence, redacting those documents puts a beacon on them, making them easier to find in the FERC set or in downloaded copies of the original EDRM set. So the redacted examples of missed PII that have been published only serve to help people locate those documents in the original sets.

Conclusion

Regardless of how effective some perceive the “cleansing” of the data set to have been, it did remove more than 10,000 items containing personal data. Still, some PII evidently remains. While some people think (and they may have a point) that the data set should not have been published until after an independent audit for remaining PII, it seems impractical (to me, at least) to wait until it is “perfect” before publishing the set. So, when is it good enough to publish? That appears to be open to interpretation.

Like Sharon, I hope that we can move forward and continue to improve the Data Set through collaboration, and that those who continue to find PII in the set will notify EDRM so that it can remove those items and keep making the set better. I’d love to see the Data Set page on the EDRM site reflect a history of each data set update, with the revision date, the number of additional PII items found and removed, and who identified them (to give credit to those finding the data). As Canned Heat would say, “Let’s Work Together”.

And, we haven’t even gotten to version 2 of the Data Set yet – more fun ahead! 🙂

So, what do you think? Have you used the EDRM Enron Data Set? If so, do you plan to download the new version? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.