In United States ex rel. Becker v. Tools & Metals, Inc., No. 3:05-CV-0627-L (N.D. Tex. Mar. 31, 2013), a qui tam action under the False Claims Act (FCA), the plaintiffs sought, and the court awarded, costs for, among other things, uploading ESI, creating a Relativity index, and processing data. The court did so over the objection that recoverable expenses should be limited to “reasonable out-of-pocket expenses which are part of the costs normally charged to a fee-paying client.” The court also approved electronic hosting costs, rejecting a defendant’s claim that “reasonableness is determined based on the number of documents used in the litigation.” However, the court refused to award project management costs, and it cut the award for extracting data from hard drives because the plaintiff should have attempted a more “targeted extraction of information” instead of imaging the drives wholesale.

One of the defendants, Lockheed Martin, objected to the magistrate judge’s recommended award of costs to the plaintiff, Spencer, arguing that recovery of expenses should be limited to “reasonable out-of-pocket expenses which are part of the costs normally charged to a fee-paying client,” as allowed under 42 U.S.C. § 1988. Among other things, Lockheed argued that:

“(1) Spencer’s request to be reimbursed for nearly $1 million in eDiscovery services is unreasonable and the magistrate’s recommendation does not cite any authority holding that a request for expenses in the amount sought by Spencer for eDiscovery is reasonable and reimbursable; (2) an award of $174,395.97 for uploading ESI and creating a search index is unfounded and arbitrary because it requires Lockheed to pay for Spencer’s decision to request ESI in a format that was different from the format that his vendor actually wanted; and (3) the recommended award punishes Lockheed for Spencer’s failure to submit detailed expense records because the actual cost of uploading and creating a search index ‘may have been substantially less’ than the magistrate judge’s $174,395.97 estimate.” {emphasis added}

The district judge found that the “FCA does not limit recovery of expenses to those normally charged to a fee-paying client.” Instead, 31 U.S.C. § 3730(d)(1)-(2) provides that “a qui tam plaintiff ‘shall . . . receive an amount for reasonable expenses which the court finds to have been necessarily incurred, plus reasonable attorneys’ fees and costs. All such expenses, fees, and costs shall be awarded against the defendant.’” The district judge therefore agreed with the magistrate judge’s finding that these expenses were recoverable. Although the defendant offered an affidavit from an expert eDiscovery consultant suggesting that the amount the plaintiff requested was unreasonable, the magistrate judge found the costs of processing and uploading the data and creating a Relativity index to be recoverable. She did, however, deny recovery of more than $38,000 attributable to repairing and reprocessing allegedly broken or corrupt files produced by Lockheed, because Lockheed had produced the documents in the requested format. She also found that Spencer “could have and should have simply requested Lockheed to reproduce the data files at no cost rather than embarking on the expensive undertaking of repairing and reprocessing the data.”

Because the plaintiff’s “billing records did not segregate the costs for reprocessing and uploading the data and creating a searchable index,” the magistrate judge apportioned the vendor’s expenses evenly among reprocessing, uploading, and creating an index. The district court agreed and rejected as “purely speculative” Lockheed’s argument that the actual cost “may have been substantially less.”

Lockheed also objected to the magistrate judge’s award of more than $271,000 in electronic hosting costs, arguing that the plaintiff had failed to show that the expenses were “reasonable and necessarily incurred” and that the magistrate judge’s report cited no authority showing that this expense was recoverable. Lockheed further argued that the vendor’s bill of “$440,039 for hosting of and user access to the documents produced in the litigation is unreasonable under the circumstances because Spencer used only five of these documents during the litigation and did not notice a single deposition.” {emphasis added}

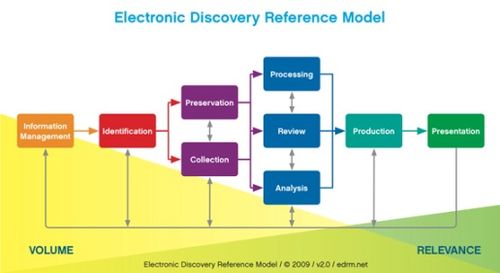

The district judge found that the data-hosting expenses were recoverable because the FCA does not limit the types of recoverable expenses. Over Spencer’s objection, he also agreed with the magistrate judge’s decision to reduce the requested hosting fees by nearly 40 percent, both by limiting recovery to the period before settlement was on the table and by reducing the number of database user accounts requested. He rejected Lockheed’s “contention that reasonableness is determined based on the number of documents used in the litigation,” noting that in this data-intensive age, many documents that are collected and reviewed may turn out to be nonresponsive or go unused in the litigation. That, however, “does not necessarily mean that the documents do not have to be reviewed by the parties for relevance by physically examining them or through the use of litigation software with searching capability to assist parties in identifying key documents.”

The district court also agreed with the magistrate judge’s decision to sustain Lockheed’s objection to the amount Spencer spent on extracting ESI from hard drives and on related travel. The magistrate judge found that Spencer did not need to review everything on the hard drives; instead, to save time and expense, he should have conducted a “targeted extraction of information,” as Lockheed did, or taken depositions “to determine how best to conduct more limited discovery.” The magistrate judge deducted nearly $65,000 from Spencer’s request, awarding him $20,000. The district court opined:

“With the availability of technology and the capability of eDiscovery vendors today in this area, the court concludes that it was unreasonable for Spencer to simply image all of the hard drives without at least first considering or attempting a more targeted and focused extraction. Also, lack of familiarity with technology in this regard is not an excuse and does not relieve parties or their attorneys of their duty to ensure that the services performed and fees charged by third party vendors are reasonable, particularly when recovery of such expenses is sought in litigation. The court therefore overrules this objection.”

Finally, the district court upheld the magistrate judge’s determination that Spencer was not entitled to recover his project management costs. Spencer argued that the “IT management of the electronic database is critical, especially when poor quality electronic evidence is produced. All complex cases of this magnitude require professional IT support.” Because Spencer failed to adequately describe the services provided and the record did not support the need for a project manager, the magistrate judge declined to reimburse this expense.

Ultimately, the court reduced the costs by $1,650 and the fees by $85,883, awarding the plaintiffs more than $1.6 million in fees and nearly $550,000 in costs. In closing, the district judge warned the parties that he would impose monetary sanctions if they filed a motion for reconsideration or to amend the judgment without good cause.

So, what do you think? Were the right cost reimbursements awarded? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Case Summary Source: Applied Discovery (free subscription required). For eDiscovery news and best practices, check out the Applied Discovery Blog.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.