Are You Scared Yet? – eDiscovery Horrors!

Today is Halloween. Every year at this time, because (after all) we’re an eDiscovery blog, we try to “scare” you with tales of eDiscovery horrors. So, I have one question: Are you scared yet?

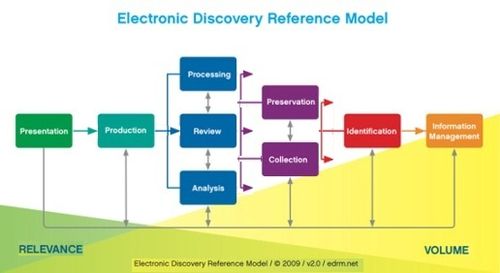

Did you know that more than 3.4 sextillion bytes have been created in the Digital Universe since the beginning of the year, and that data in the world will grow nearly threefold from 2012 to 2017? How do you handle your own growing universe of data?
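To put that growth figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the 2012 to 2017 growth is a factor of exactly 3 and that it compounds evenly each year; both are simplifying assumptions for illustration, not figures from the underlying study.

```python
# Rough sketch: implied yearly growth if data triples over five years.
# Assumptions (not from the source): exactly 3x growth, compounding evenly.
growth_factor = 3.0
years = 2017 - 2012  # five-year span

# Solve (1 + rate) ** years == growth_factor for rate.
annual_rate = growth_factor ** (1 / years) - 1

print(f"Implied annual growth: {annual_rate:.1%}")
```

Under those assumptions, the world's data would be growing by roughly a quarter every year, which is a useful yardstick when sizing up your own collections.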

What about this?

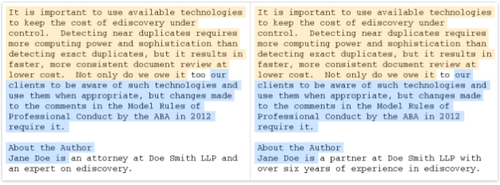

The proposed blended hourly rate was $402 for firm associates and $632 for firm partners. However, the firm asked for contract attorney hourly rates as high as $550 with a blended rate of $466.

How about this?

You’ve got an employee suing her ex-employer for discrimination, hostile work environment and being forced to resign. During discovery, it was determined that a key email was deleted due to the employer’s routine auto-delete policy, so the plaintiff filed a motion for sanctions. Sound familiar? Yep. Was her motion granted? Nope.

Or maybe this?

After identifying the custodians relevant to the case, you’ve collected roughly 100 gigabytes (GB) of Microsoft Outlook email PST files and loose electronic files from them. You identify a vendor to process the files and load them into a review tool, so that you can perform review and produce the files to opposing counsel. After processing, the vendor sends you a bill – and they’ve charged you to process over 200 GB!!

Scary, huh? If the possibility of exponential data growth, vendors holding data hostage and billable review rates of $466 per hour keeps you awake at night, then the folks at eDiscovery Daily will do our best to provide useful information and best practices to enable you to relax and sleep soundly, even on Halloween!

Then again, if the expense, difficulty and risk of processing and loading up to 100 GB of data into an eDiscovery review application that you’ve never used before terrify you, maybe you should check this out.

Of course, if you seriously want to get into the spirit of Halloween, click here. This will really terrify you!

What do you think? Is there a particular eDiscovery issue that scares you? Please share your comments and let us know if you’d like more information on a particular topic.

Happy Halloween!

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.