As we noted yesterday, eDiscoveryDaily published 98 posts related to eDiscovery case decisions and activities over the past year, covering 62 unique cases! Yesterday, we looked back at cases related to proportionality and cooperation, privilege and inadvertent disclosures, and eDiscovery cost reimbursement. Today, let’s take a look back at cases related to social media and, of course, technology assisted review(!).

We grouped those cases into common subject themes and will review them over the next few posts. Perhaps you missed some of these? Now is your chance to catch up!

SOCIAL MEDIA

Requests for social media data in litigation continue. Unlike last year, however, not all requests for social media data were granted as some requests were deemed overbroad. However, Twitter fought “tooth and nail” (unsuccessfully, as it turns out) to avoid turning over a user’s tweets in at least one case. Here are six cases related to social media data:

Class Action Plaintiffs Required to Provide Social Media Passwords and Cell Phones. Considering proportionality and accessibility concerns in EEOC v. Original Honeybaked Ham Co. of Georgia, Colorado Magistrate Judge Michael Hegarty held that where a party had showed certain of its adversaries’ social media content and text messages were relevant, the adversaries must produce usernames and passwords for their social media accounts, usernames and passwords for e-mail accounts and blogs, and cell phones used to send or receive text messages to be examined by a forensic expert as a special master in camera.

Another Social Media Discovery Request Ruled Overbroad. As was the case in Mailhoit v. Home Depot previously, Magistrate Judge Mark R. Abel ruled in Howell v. The Buckeye Ranch that the defendant’s request (to compel the plaintiff to provide her user names and passwords for each of the social media sites she uses) was overbroad.

Twitter Turns Over Tweets in People v. Harris. As reported by Reuters, Twitter has turned over Tweets and Twitter account user information for Malcolm Harris in People v. Harris, after their motion for a stay of enforcement was denied by the Appellate Division, First Department in New York and they faced a finding of contempt for not turning over the information. Twitter surrendered an “inch-high stack of paper inside a mailing envelope” to Manhattan Criminal Court Judge Matthew Sciarrino, which will remain under seal while a request for a stay by Harris is heard in a higher court.

Home Depot’s “Extremely Broad” Request for Social Media Posts Denied. In Mailhoit v. Home Depot, Magistrate Judge Suzanne Segal ruled that the three out of four of the defendant’s discovery requests failed Federal Rule 34(b)(1)(A)’s “reasonable particularity” requirement, were, therefore, not reasonably calculated to lead to the discovery of admissible evidence and were denied.

Social Media Is No Different than eMail for Discovery Purposes. In Robinson v. Jones Lang LaSalle Americas, Inc., Oregon Magistrate Judge Paul Papak found that social media is just another form of electronically stored information (ESI), stating “I see no principled reason to articulate different standards for the discoverability of communications through email, text message, or social media platforms. I therefore fashion a single order covering all these communications.”

Plaintiff Not Compelled To Turn Over Facebook Login Information. In Davids v. Novartis Pharm. Corp., the Eastern District of New York ruled against the defendant on whether the plaintiff in her claim against a pharmaceutical company could be compelled to turn over her Facebook account’s login username and password.

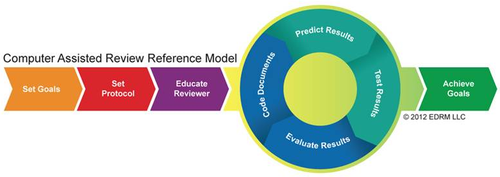

TECHNOLOGY ASSISTED REVIEW

eDiscovery vendors everywhere had been “waiting with bated breath” for the first case law pertaining to acceptance of technology assisted review within the courtroom. Not only did they get their case, they got a few others – and, in one case, the judge actually required both parties to use predictive coding. And, of course, there was a titanic battle over the use of predictive coding in the DaSilva Moore – easily the most discussed case of the year. Here are five cases where technology assisted review was at issue:

Louisiana Order Dictates That the Parties Cooperate on Technology Assisted Review. In the case In re Actos (Pioglitazone) Products Liability Litigation, a case management order applicable to pretrial proceedings in a multidistrict litigation consolidating eleven civil actions, the court issued comprehensive instructions for the use of technology-assisted review (“TAR”).

Judge Carter Refuses to Recuse Judge Peck in Da Silva Moore. This is only the final post of the year in eDiscovery Daily related to Da Silva Moore v. Publicis Groupe & MSL Group. There were at least nine others (linked within this final post) detailing New York Magistrate Judge Andrew J. Peck’s original opinion accepting computer assisted review, the plaintiff’s objections to the opinion, their subsequent attempts to have Judge Peck recused from the case (alleging bias) and, eventually, District Court Judge Andrew L. Carter’s orders upholding Judge Peck’s original opinion and refusing to recuse him in the case.

Both Sides Instructed to Use Predictive Coding or Show Cause Why Not. Vice Chancellor J. Travis Laster in Delaware Chancery Court – in EORHB, Inc., et al v. HOA Holdings, LLC, – has issued a “surprise” bench order requiring both sides to use predictive coding and to use the same vendor.

No Kleen Sweep for Technology Assisted Review. For much of the year, proponents of predictive coding and other technology assisted review (TAR) concepts have been pointing to three significant cases where the technology based approaches have either been approved or are seriously being considered. Da Silva Moore v. Publicis Groupe and Global Aerospace v. Landow Aviation are two of the cases, the third one is Kleen Products v. Packaging Corp. of America. However, in the Kleen case, the parties have now reached an agreement to drop the TAR-based approach, at least for the first request for production.

Is the Third Time the Charm for Technology Assisted Review? In Da Silva Moore v. Publicis Groupe & MSL Group, Magistrate Judge Andrew J. Peck issued an opinion making it the first case to accept the use of computer-assisted review of electronically stored information (“ESI”) for this case. Or, so we thought. Conversely, in Kleen Products LLC v. Packaging Corporation of America, et al., the plaintiffs have asked Magistrate Judge Nan Nolan to require the producing parties to employ a technology assisted review approach in their production of documents. Now, there’s a third case where the use of technology assisted review is actually being approved in an order by the judge.

Tune in tomorrow for more key cases of 2012 and one of the most common themes of the year!

So, what do you think? Did you miss any of these? Please share any comments you might have or if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.