Alon Israely, Esq., CISSP of BIA – eDiscovery Trends

This is the sixth of the 2013 LegalTech New York (LTNY) Thought Leader Interview series. eDiscoveryDaily interviewed several thought leaders at LTNY this year and generally asked each of them the following questions:

- What are your general observations about LTNY this year and how it fits into emerging trends?

- If last year’s “next big thing” was the emergence of predictive coding, what do you feel is this year’s “next big thing”?

- What are you working on that you’d like our readers to know about?

Today’s thought leader is Alon Israely. Alon is a Manager of Strategic Partnerships at Business Intelligence Associates (BIA) and currently leads the Strategic Partner Program at BIA. Alon has over seventeen years of experience in a variety of advanced computing-related technologies and has consulted with law firms and their clients on a variety of technology issues, including expert witness services related to computer forensics, digital evidence management and data security. Alon is an attorney and Certified Information Systems Security Professional (CISSP).

What are your general observations about LTNY this year and how it fits into emerging trends?

{Interviewed on the second afternoon} Looking at the show and walking around the exhibit hall, I feel like the show is less chaotic than in the past. It seems like there are fewer vendors, though I don’t know that for a fact. However, the vendors that are here appear to have accomplished quite a bit over the last twelve months to better clarify their messaging, as well as to fine-tune their offerings and the way they present those offerings. It’s actually more enjoyable for me to walk through the exhibit hall this year – last year felt so chaotic and it was really difficult to differentiate the offerings. That has been a problem in the legal technology business – no one really knows what the different vendors do and they all seem to do the same thing. Because of better messaging, I think this is the first year I started to truly feel that I can differentiate vendor offerings, probably because some of the vendors that entered the industry in the past few years have reached a maturity level.

So, it’s not that I am seeing new technologies, methods or ways of doing things in eDiscovery; instead, I am seeing better ways of doing things, as well as vendors simply getting better at their own pitch and messaging. And, by that, I mean everything involved in the messaging – the booth, the sales reps in the booth, the product being offered, everything.

If last year’s “next big thing” was the emergence of predictive coding, what do you feel is this year’s “next big thing”?

I think this year’s “next big thing” follows the same theme as last year’s “next big thing”, only you’re going to see more mature Technology Assisted Review (TAR) solutions and more mature predictive coding. It won’t be just that people provide a predictive coding solution; they will also provide a workflow around the solution and a UI around the solution, as well as a method, a process, testing and even certification. So, the trend will still be technology assisted review, predictive coding and analytics; it just won’t be so “bleeding edge”. The key is presentation of data such that it helps attorneys get through the data in a smarter way – not necessarily just culling, but understanding the data that you have and how to get through it faster and more accurately. I think that the delivery of those approaches through solution providers, software providers and even service providers seems to be more mature and more focused. Now, there is an actual tangible “thing” that I can touch that shows it is not just a bullet point – “Hey, we do predictive coding!” – instead, there is actually a method in which it is deployed to you, and to your case or your matter.

What are you working on that you’d like our readers to know about?

BIA is really redefining eDiscovery with respect to how the corporate customer looks at it. How does the corporation look at eDiscovery? They look at it as part of information security and information management, and we find that IT departments are much more involved in the decision-making process. Having information security roots, BIA is leveraging our preservation technology and bringing in an eDiscovery toolkit and platform that a company can use to get where it needs to be with respect to compliance, defensibility and efficiency. We also have the only corporate license model of its kind for eDiscovery in the business: the per-seat model that we offer. We are saying “look, we have been doing this for 10 years and we know exactly what we are doing”. We use cutting-edge technology and, while other cloud providers have claimed that they are leveraging utility computing, we are not only saying that, we are actually doing it. If you don’t believe us, check it out and bring your best technology people and they will see we are telling the truth on that. We are leveraging our technology for what happens from the corporate perspective.

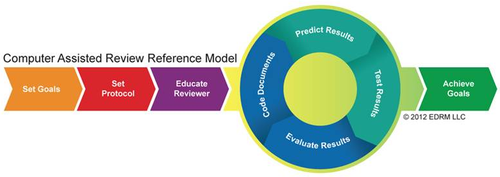

We are not a review tool and you cannot produce documents out of our software, but that is why clients have software products like OnDemand®; with it, they can do all the different types of review they want and batch it out and use 100 reviewers or 10 reviewers or whatever. BIA supports the corporations who care about legal hold and preservation and collections and ensuring that they are not sending millions of gigs over for costly review. We support from the corporate perspective, whether you want to call it on the left side of the EDRM model or not, what the GC needs. GCs want to make sure that they have not deleted some piece of data that will be needed in court. Notifying clients of that requirement, taking a “snapshot” of that data, locking it down, collecting that data and then ensuring that our clients are following the right workflow is basically what we bring to the table. We have also automated about 80% of the manual tasks with TotalDiscovery, which makes the GC happy and brings that protection to the organization at the right price. Between TotalDiscovery and a review application like OnDemand, you don’t need anything else. You don’t need twenty applications for a full solution – two applications are all you need.

Thanks, Alon, for participating in the interview!

And to the readers, as always, please share any comments you might have or let us know if you’d like to know more about a particular topic!

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.