What is “Reduping”? – eDiscovery Explained

We’ve talked about “reduping” before, but since this question came up with a client recently, I thought it was worth revisiting.

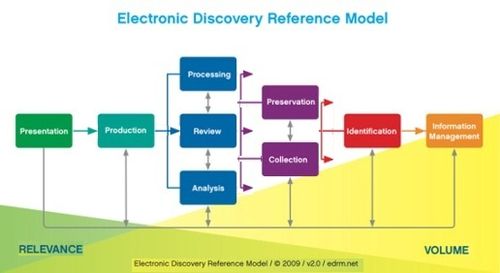

Because emails are often sent to multiple custodians, deduplication (or “deduping”) has become a common practice for eliminating multiple copies of the same email or file from the review collection. Deduping saves considerable review costs and ensures consistency by preventing different reviewers from applying different responsiveness or privilege determinations to the same file (e.g., if one copy of a file is designated as privileged while another copy is not, the privileged file may slip into the production set). Deduping can be performed either across all custodians in a case or within each custodian.
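To make the two deduping scopes concrete, here is a minimal Python sketch. It assumes, purely for illustration, that two files count as duplicates when a SHA-1 hash of their raw bytes matches; real review platforms may use different hashing rules. The `Document` class and `dedupe` function are hypothetical names, not any product's API.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    custodian: str
    content: bytes


def content_hash(doc: Document) -> str:
    # Assumption: files are "duplicates" when their raw bytes hash the same.
    return hashlib.sha1(doc.content).hexdigest()


def dedupe(docs, scope="global"):
    """Split docs into a review set and a map of suppressed duplicates.

    scope="global":    keep one copy per hash across all custodians.
    scope="custodian": keep one copy per hash within each custodian.
    """
    if scope not in ("global", "custodian"):
        raise ValueError(f"unknown scope: {scope!r}")
    first_seen = {}   # dedupe key -> first Document seen with that key
    review_set = []   # documents reviewers will actually see
    duplicates = {}   # doc_id of a reviewed doc -> list of suppressed copies
    for doc in docs:
        digest = content_hash(doc)
        key = digest if scope == "global" else (doc.custodian, digest)
        if key in first_seen:
            duplicates[first_seen[key].doc_id].append(doc)
        else:
            first_seen[key] = doc
            review_set.append(doc)
            duplicates[doc.doc_id] = []
    return review_set, duplicates


docs = [
    Document("D1", "Smith", b"Q3 forecast attached"),
    Document("D2", "Jones", b"Q3 forecast attached"),
    Document("D3", "Smith", b"Q3 forecast attached"),
    Document("D4", "Jones", b"Lunch on Friday?"),
]
for scope in ("global", "custodian"):
    review_set, _ = dedupe(docs, scope)
    print(scope, [d.doc_id for d in review_set])
```

With this sample data, the global scope sends two documents to review (D1 and D4), while the per-custodian scope sends three (D1, D2 and D4), because Jones's copy of the forecast is kept alongside Smith's.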

Everyone who works in electronic discovery knows what “deduping” is. But how many of you know what “reduping” is? Here’s the answer:

“Reduping” is the process of re-introducing duplicates back into the population for production after completing review. There are a couple of reasons why a producing party may want to “redupe” the collection after review:

- Deduping Not Requested by Receiving Party: Because parties in many cases still don’t hold a meet and confer or discuss production specifications, they may never have agreed on whether to include duplicates in the production set. In those cases, the producing party may choose to produce the duplicates, giving the receiving party more files to review and driving up its costs (yes, this still happens). The producing party’s attitude can be: “hey, they didn’t specify, so we’ll give them more than they asked for.”

- Receiving Party May Want to See Who Has Copies of Specific Files: Sometimes, the receiving party does request that duplicates be removed only within each custodian, not across custodians, because it wants to see which custodians had a copy of a specific email or file. However, the producing party still doesn’t want to review those duplicates (because of the added cost and the risk of inconsistent designations), so it reviews a collection deduped across custodians and then redupes once review is complete.
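The second scenario can be sketched in Python as well. Assuming review was done on a collection deduped across custodians, the hypothetical `redupe_per_custodian` function below expands each reviewed document back out to one copy per custodian and copies the reviewer's tags onto every copy, so each custodian's file carries the same designation. The names, tags and data structures are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    custodian: str


def redupe_per_custodian(review_set, duplicates, tags):
    """Expand a reviewed, globally deduped set into one copy per custodian.

    review_set: documents that were actually reviewed
    duplicates: reviewed doc_id -> list of suppressed duplicate Documents
    tags:       reviewed doc_id -> set of tags applied by the reviewer
    Returns a list of (Document, frozenset_of_tags) pairs.
    """
    production = []
    for reviewed in review_set:
        decision = frozenset(tags.get(reviewed.doc_id, ()))
        custodians_kept = set()
        for doc in [reviewed, *duplicates.get(reviewed.doc_id, [])]:
            if doc.custodian in custodians_kept:
                continue  # this custodian already has a copy in the set
            custodians_kept.add(doc.custodian)
            production.append((doc, decision))  # copy inherits the tags
    return production


review_set = [Document("D1", "Smith"), Document("D4", "Jones")]
duplicates = {
    "D1": [Document("D2", "Jones"), Document("D3", "Smith")],
    "D4": [],
}
tags = {"D1": {"Responsive"}, "D4": {"Non-Responsive"}}

for doc, decision in redupe_per_custodian(review_set, duplicates, tags):
    print(doc.doc_id, doc.custodian, sorted(decision))
```

Here D2 comes back because it is Jones's copy of the forecast, while D3 stays suppressed because Smith's copy (D1) is already included; only D1 was ever reviewed.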

As a receiving party, you’ll want to specifically address how dupes should be handled during production to ensure that you don’t receive duplicate files that provide no value.

Many review applications support reduping. For example, CloudNine Discovery’s review tool, OnDemand® (shameless plug warning!), enables duplicates to be suppressed from review and then applies the same tags to the duplicates of any files tagged during review. When it’s time to export documents for production, the user can decide whether or not to include the dupes in that production.
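Conceptually, tag propagation and the export-time choice reduce to a few lines. The Python sketch below is not how OnDemand® or any other product implements them: `propagate_tags`, `select_for_export`, and the `Produce` and `Privileged` tags are hypothetical, and duplicates are assumed to be tracked as a map from each reviewed document to the IDs of its suppressed copies.

```python
def propagate_tags(tags, duplicates):
    """Copy each reviewed document's tags onto all of its suppressed duplicates.

    tags:       reviewed doc_id -> set of tags
    duplicates: reviewed doc_id -> list of duplicate doc_ids
    """
    propagated = {doc_id: set(t) for doc_id, t in tags.items()}
    for reviewed_id, dup_ids in duplicates.items():
        for dup_id in dup_ids:
            propagated[dup_id] = set(tags.get(reviewed_id, ()))
    return propagated


def select_for_export(tags, duplicates, tag, include_dupes):
    """Return sorted doc_ids carrying `tag`, with or without the duplicates."""
    all_tags = propagate_tags(tags, duplicates)
    dup_ids = {d for dups in duplicates.values() for d in dups}
    return sorted(
        doc_id
        for doc_id, doc_tags in all_tags.items()
        if tag in doc_tags and (include_dupes or doc_id not in dup_ids)
    )


tags = {"D1": {"Produce"}, "D4": {"Privileged"}}
duplicates = {"D1": ["D2", "D3"], "D4": ["D5"]}

print(select_for_export(tags, duplicates, "Produce", include_dupes=False))
print(select_for_export(tags, duplicates, "Produce", include_dupes=True))
```

The first call exports only D1; the second also exports its duplicates D2 and D3. Because D5 inherits D4's Privileged tag rather than being reviewed separately, it cannot end up with a conflicting designation.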

So, what do you think? Do any of your cases include “reduping” as part of production? Please share any comments you might have, or let us know if you’d like to know more about a particular topic.

Disclaimer: The views represented herein are exclusively the views of the author, and do not necessarily represent the views held by CloudNine Discovery. eDiscoveryDaily is made available by CloudNine Discovery solely for educational purposes to provide general information about general eDiscovery principles and not to provide specific legal advice applicable to any particular circumstance. eDiscoveryDaily should not be used as a substitute for competent legal advice from a lawyer you have retained and who has agreed to represent you.